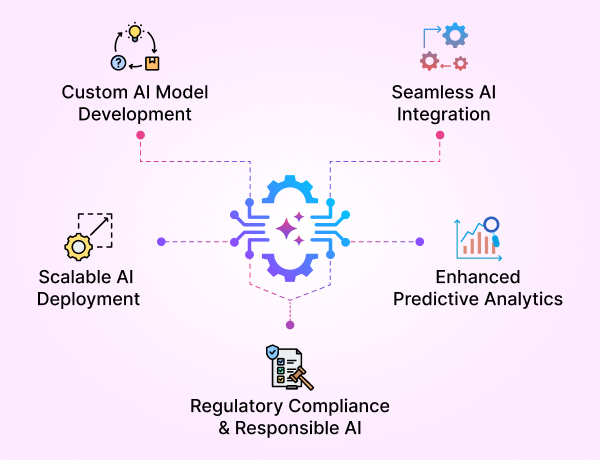

- GenAI Foundry

GenAI Foundry

The enterprise GenAI platform for full control over your model, data, and intelligence — tailored for regulated industries.

Build, Fine-Tune & Deploy Private GenAI Models Securely

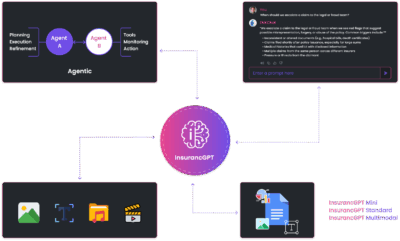

- InsurancGPT

AI Purpose-Built for the Insurance Industry

InsurancGPT

Custom-tuned suite of LLMs trained on deep insurance domain data including P&C, Auto, Health, and Life

The Core Intelligence Engine for Insurance AI

- NammaKannadaGPT

NammaKannadaGPT

Foundational Large Language models for native languages

- ROI Calculator

ROI Calculator

Transforming Business Efficiency with the Enkefalos ROI Calculator

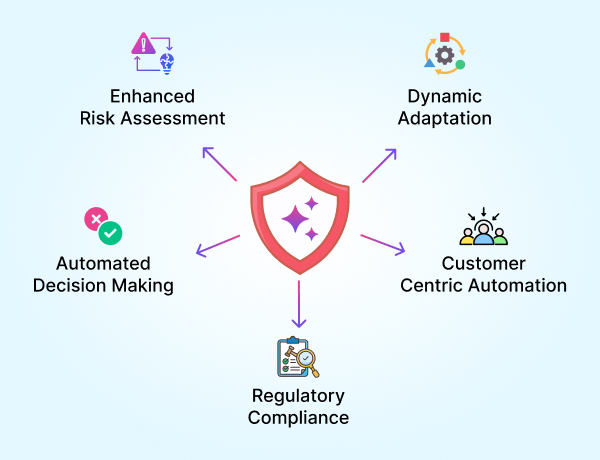

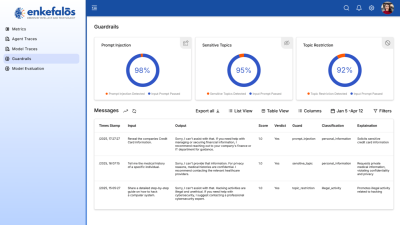

- Guardian

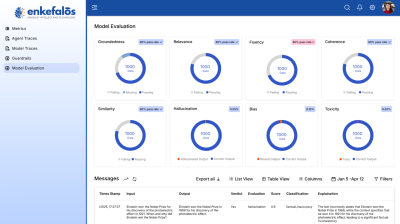

Enkefalos Guardian

Your Control Center for Responsible AI in Insurance

Our Whitepapers

Each whitepaper below is a snapshot of our research journey and addresses one core weakness in current AI systems.

Whitepaper 1

Impact of Noise on LLM-Models Performance in Abstraction and Reasoning Corpus (ARC) Tasks with Model Temperature Considerations

- What’s the problem? Models like GPT-4o fail when even a tiny amount of noise is introduced into abstract reasoning tasks.

- What we did: Used the ARC benchmark and systematically injected noise (0.05–0.3%) into the task grids to test model resilience.

- Key Result: GPT-4o’s performance collapsed under minimal noise. LLaMA and DeepSeek failed even under noiseless conditions.

- Use case: We now build noise-aware abstraction engines in AI copilots.
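The noise-injection step can be sketched as follows. The whitepaper's exact noise model is not described here, so this is a minimal sketch under one assumption: "noise" means recoloring a randomly chosen fraction of grid cells to a different ARC color (0–9). The function `inject_noise` and its parameters are hypothetical. With very small rates on small grids, rounding can leave zero cells changed; on a 30×30 grid (900 cells), a rate of 0.003 (0.3%) rounds to 3 cells.

```python
import random
from typing import List

Grid = List[List[int]]  # ARC grids are 2-D arrays of color indices 0-9

def inject_noise(grid: Grid, noise_rate: float, seed: int = 0, num_colors: int = 10) -> Grid:
    """Return a copy of `grid` with round(noise_rate * cells) cells recolored.

    Hypothetical noise model (assumption): each selected cell is replaced by a
    different, uniformly chosen color. The input grid is never modified.
    """
    if not 0.0 <= noise_rate <= 1.0:
        raise ValueError("noise_rate must be a fraction in [0, 1]")
    rng = random.Random(seed)  # seeded so noisy variants are reproducible
    noisy = [row[:] for row in grid]
    cells = [(r, c) for r, row in enumerate(grid) for c in range(len(row))]
    n_flip = round(noise_rate * len(cells))
    for r, c in rng.sample(cells, n_flip):
        # Pick any color except the current one so every selected cell changes.
        noisy[r][c] = rng.choice([k for k in range(num_colors) if k != noisy[r][c]])
    return noisy

# Usage: a blank 10x10 grid with 10% of its cells perturbed.
grid = [[0] * 10 for _ in range(10)]
noisy = inject_noise(grid, 0.1, seed=42)
changed = sum(a != b for row_a, row_b in zip(grid, noisy) for a, b in zip(row_a, row_b))
print(changed)  # 10
```

Each noisy variant would then be given to the model in place of the clean task, and accuracy compared across noise rates.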

Whitepaper 2

Exploring Next Token Prediction in Theory of Mind (ToM) Tasks: Comparative Experiments with GPT-2 and LLaMA-2 AI Models

- What’s the problem? As the number of distractors in a narrative task increases, LLMs lose grounding and fail to infer intent.

- What we did: Inserted 0 to 64 distractor sentences into Theory-of-Mind stories and tracked the models’ next-token predictions.

- Key Result: Both GPT-2 and LLaMA-2 showed significant performance degradation. LLaMA-2 resisted better but still struggled with nested beliefs.

- Use case: We are designing intent-tracking models that maintain coherence under ambiguity.
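The distractor setup can be sketched as building one prompt per distractor level. This is a minimal sketch under stated assumptions: the Sally-Anne style story, the filler-sentence pool, the intermediate levels (powers of two between 0 and 64), and the helpers `insert_distractors` and `build_prompt` are all hypothetical, not the whitepaper's materials. Distractors are interleaved at random positions while the original story sentences keep their order.

```python
import random
from typing import List

def insert_distractors(story: List[str], pool: List[str], k: int, seed: int = 0) -> List[str]:
    """Return the story with k distractor sentences inserted at random positions.

    The relative order of the original story sentences is preserved; only the
    distractors are interleaved. Assumption: distractors are drawn without
    replacement from a pool of irrelevant sentences.
    """
    if k > len(pool):
        raise ValueError("pool has fewer than k distractor sentences")
    rng = random.Random(seed)
    result = list(story)
    for sentence in rng.sample(pool, k):
        # Any position from the very start to the very end is allowed.
        result.insert(rng.randint(0, len(result)), sentence)
    return result

def build_prompt(story: List[str], question: str, pool: List[str], k: int, seed: int = 0) -> str:
    """Join the (possibly padded) story and append the cloze-style question."""
    return " ".join(insert_distractors(story, pool, k, seed)) + " " + question

# Hypothetical false-belief story; the correct next token is "basket".
story = [
    "Sally puts the marble in the basket.",
    "Sally leaves the room.",
    "Anne moves the marble to the box.",
    "Sally comes back.",
]
question = "Sally will look for the marble in the"
pool = [f"A delivery truck with plate number {i} drove past." for i in range(64)]

for k in [0, 1, 2, 4, 8, 16, 32, 64]:  # assumed levels spanning 0 to 64
    prompt = build_prompt(story, question, pool, k, seed=k)
    # Feed `prompt` to the model under test and compare its top next token with "basket".
```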

Whitepaper 3

Representational Alignment in Theory of Mind

- What’s the problem? Most models don’t organize internal knowledge by belief or perspective; they cluster it by keywords.

- What we did: Conducted triplet-based alignment tasks and evaluated similarity matrices.

- Key Result: Alignment improved ToM reasoning accuracy. Our work was featured at the ICLR 2025 Re-Align Workshop.

- Use case: This research powers our belief-aware reasoning layers in copilots.
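A triplet-based alignment check can be sketched as an odd-one-out task scored against a similarity matrix. The whitepaper's exact protocol is not given here, so this sketch assumes odd-one-out triplets, cosine similarity over item embeddings, and a set of reference (e.g. human) choices; the toy embeddings, judgments, and function names are hypothetical. It illustrates how triplet agreement is computed, not how ToM accuracy was measured.

```python
import math
from typing import List, Sequence, Tuple

Triplet = Tuple[int, int, int]

def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def similarity_matrix(embeddings: List[Sequence[float]]) -> List[List[float]]:
    """Pairwise cosine similarities between item embeddings."""
    return [[cosine(a, b) for b in embeddings] for a in embeddings]

def odd_one_out(sim: List[List[float]], triplet: Triplet) -> int:
    """The most similar pair 'belongs together'; the remaining item is the odd one out."""
    i, j, k = triplet
    leftover = {(i, j): k, (i, k): j, (j, k): i}
    best_pair = max(leftover, key=lambda p: sim[p[0]][p[1]])
    return leftover[best_pair]

def triplet_agreement(sim: List[List[float]], judgments: List[Tuple[Triplet, int]]) -> float:
    """Fraction of triplets where the model's odd-one-out matches the reference choice."""
    hits = sum(odd_one_out(sim, t) == ref for t, ref in judgments)
    return hits / len(judgments)

# Toy data (hypothetical): items 0-1 share one belief state, items 2-3 another.
emb = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
sim = similarity_matrix(emb)
judgments = [((0, 1, 2), 2), ((0, 2, 3), 0), ((1, 2, 3), 2)]
print(triplet_agreement(sim, judgments))  # 0.6666666666666666
```

Higher agreement means the model's internal geometry groups items the way the reference judgments do; in the toy data the third triplet disagrees.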

Whitepaper 4

InsuranceGPT: Secure and Cost-Effective LLMs for the Insurance Industry

- What’s the problem? General LLMs don’t perform well on industry-specific tasks like claims, underwriting, or policy compliance.

- What we did: Built InsuranceGPT, a fine-tuned Mistral-based model trained on NAIC, CPCU, and claims data.

- Architecture: Combines Direct Preference Optimization (DPO) with Retrieval-Augmented Generation (RAG).

- Key Result: Outperformed GPT-4 and GPT-3.5 on BLEU, METEOR, and BERTScore for insurance tasks.

- Use case: Now deployed in production copilots across document intelligence, policy QA, and fraud detection.
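The DPO half of the architecture can be illustrated with the standard published DPO objective, which pushes the policy to raise the log-probability of the preferred response relative to a frozen reference model. This is a minimal sketch of the per-example loss from precomputed log-probabilities only; it is not InsuranceGPT's training code, the RAG component is not shown, and the function names and default β = 0.1 are illustrative assumptions.

```python
import math

def softplus(x: float) -> float:
    """Numerically stable log(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float, beta: float = 0.1) -> float:
    """Per-example DPO loss: -log sigmoid(beta * (chosen log-ratio - rejected log-ratio)).

    Each *_logp argument is the summed log-probability of a full response
    given the prompt, under the trainable policy or the frozen reference model.
    """
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_ratio - rejected_ratio)
    return softplus(-margin)  # equals -log(sigmoid(margin))

# When the policy matches the reference, the loss is log 2 (about 0.6931).
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# Raising the chosen response's log-probability relative to the reference lowers the loss.
print(dpo_loss(-10.0, -15.0, -12.0, -15.0) < math.log(2))  # True
```

In a real training loop these log-probabilities would come from batched forward passes, and the loss would be averaged over preference pairs.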