- GenAI Foundry

GenAI Foundry

The enterprise GenAI platform for full control over your model, data, and intelligence — tailored for regulated industries.

Build, Fine-Tune & Deploy Private GenAI Models Securely

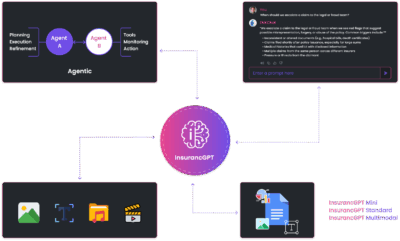

- InsurancGPT

AI Purpose-Built for the Insurance Industry

InsurancGPT

Custom-tuned suite of LLMs trained on deep insurance domain data including P&C, Auto, Health, and Life

The Core Intelligence Engine for Insurance AI

- NammaKannadaGPT

NammaKannadaGPT

Foundational Large Language models for native languages

- ROI Calculator

ROI Calculator

Transforming Business Efficiency with the Enkefalos ROI Calculator

- Guardian

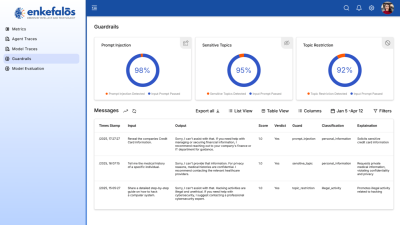

Enkefalos Guardian

Your Control Center for Responsible AI in Insurance

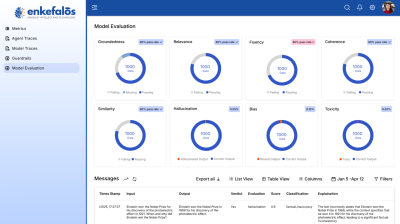

Evaluating Large Language Models – Evaluation Metrics

In AI, evaluation metrics are essential for assessing the quality and performance of language models. They gauge how well a language model (e.g., Mistral or GPT-4) aligns with human-like understanding across diverse tasks. Just as school tests assess a student’s grasp of a subject, evaluation metrics measure a model’s proficiency in language tasks. Whether the use case is writing assistance, information retrieval, or another commercial or personal application, as shown in the image below, we need to know how effectively a language model is performing.

Evaluation metrics help us answer questions such as:

1. Is the writing assistance provided by the model coherent and contextually relevant?

2. Does information retrieval return accurate and useful results?

3. In commercial applications, does the model handle customer support interactions smoothly?

4. For personal use, does the model facilitate efficient problem-solving or provide reliable support?

5. Is a measured difference in model performance statistically significant, or only due to chance?

For instance, in writing assistance, metrics such as BLEU, ROUGE, and METEOR measure how closely model-generated text overlaps with human references, which matters for creative writing and technical documents. In information retrieval, precision and recall are often considered. In a commercial setting where, for example, a model supports medical diagnosis, evaluation may involve accuracy-oriented metrics such as the F1 score alongside language-modeling measures such as perplexity. Tests such as McNemar’s test are used to assess whether differences in model output are statistically significant.

Here are the evaluation methods in detail.

Metrics for Evaluating Large Language Models

Automatic Evaluation Metrics:

- Exact Match (EM): In the LLM setting, EM measures the proportion of generated texts that exactly match the reference texts.

It's calculated as:

EM = (Number of exactly matching generated texts) / (Total number of generated texts)

For example, if an LLM generates 100 sentences and 20 of them exactly match the corresponding reference sentences, the EM score would be 20/100 = 0.2, or 20%.

- F1 Score: To compute the F1 Score for LLM evaluation, we need to define precision and recall at the token level. Precision measures the proportion of generated tokens that match the reference tokens, while recall measures the proportion of reference tokens that are captured by the generated tokens.

Precision = (Number of matching tokens in generated text) / (Total number of tokens in generated text)

Recall = (Number of matching tokens in generated text) / (Total number of tokens in reference text)

F1 = 2 * (Precision * Recall) / (Precision + Recall)

For example, let's say the LLM generates the sentence "The quick brown fox jumps over the lazy dog" and the reference sentence is "A quick brown fox jumps over the lazy dog". Both sentences contain 9 tokens, and 8 of them match (every token except the leading "The" versus "A"). The precision is therefore 8/9 and the recall is 8/9, giving an F1 score of 2 * (8/9 * 8/9) / (8/9 + 8/9) = 8/9 ≈ 0.889.

- BLEU (Bilingual Evaluation Understudy): BLEU is a widely used metric for evaluating machine translation and text generation systems. It calculates the geometric mean of modified n-gram precision scores (usually up to 4-grams) and applies a brevity penalty to penalize generated texts that are shorter than the reference. The BLEU score ranges from 0 to 1, with higher values indicating better performance.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): ROUGE is a set of metrics commonly used for evaluating automatic summarization. It measures the overlap of n-grams (usually unigrams and bigrams) between the generated summary and reference summaries. The main variants are ROUGE-N (n-gram recall), ROUGE-L (longest common subsequence), and ROUGE-S (skip-bigram co-occurrence).

- METEOR (Metric for Evaluation of Translation with Explicit Ordering): METEOR is another metric used for evaluating machine translation and text generation. It considers not only exact word matches but also stemming, synonyms, and paraphrases. METEOR computes a weighted harmonic mean of precision and recall, giving more importance to recall. It also includes a fragmentation penalty to favour longer consecutive matches.
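The Exact Match and token-level F1 definitions above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the helper names (`tokenize`, `exact_match`, `token_f1`) are made up for this example, and the lowercase whitespace tokenization is an assumption. Established benchmarks often apply additional normalization, such as stripping punctuation.

```python
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Assumption: lowercase + whitespace split. Real benchmarks often
    # normalize further (punctuation, articles).
    return text.lower().split()


def exact_match(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions identical to their paired reference."""
    matches = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return matches / len(predictions)


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = tokenize(prediction)
    ref_tokens = tokenize(reference)
    # Multiset intersection: each reference token is matched at most once.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


generated = "The quick brown fox jumps over the lazy dog"
reference = "A quick brown fox jumps over the lazy dog"
print(exact_match([generated], [reference]))      # 0.0
print(round(token_f1(generated, reference), 3))   # 0.889
```

On the two "quick brown fox" sentences, the sketch counts 8 shared tokens out of 9 on each side, so precision, recall, and F1 all equal 8/9, while Exact Match is 0 because the strings differ.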

Confidence Level Metrics:

- Expected Calibration Error (ECE): ECE measures the gap between a model’s confidence and its actual accuracy. It’s calculated by partitioning predictions into bins based on confidence and computing the average of the absolute difference between mean confidence and accuracy in each bin, weighted by the number of predictions in the bin.

- Area Under the Curve (AUC): AUC evaluates the model’s ability to discriminate between classes. It is the area under the ROC curve, which plots the True Positive Rate (TPR) against the False Positive Rate (FPR) at various threshold settings. A higher AUC indicates better discrimination, with 0.5 corresponding to random guessing and 1.0 to perfect separation.
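Both confidence metrics can be computed directly from per-example confidences and correctness labels. The sketch below is illustrative only: the function names are hypothetical, the ten equal-width bins are an assumed default, and AUC is computed through its pairwise-ranking interpretation (the probability that a randomly chosen positive scores higher than a randomly chosen negative) instead of by integrating the ROC curve. The two formulations give the same value.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE with equal-width confidence bins (n_bins=10 is an assumed default)."""
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, is_correct in zip(confidences, correct):
        # A confidence of exactly 1.0 is placed in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, is_correct))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Weight each bin's gap by its share of all predictions.
        ece += (len(members) / n) * abs(avg_conf - accuracy)
    return ece


def roc_auc(scores, labels):
    """AUC as P(score of positive > score of negative); ties count as half."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in positives:
        for q in negatives:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


confidences = [0.9, 0.9, 0.9, 0.9, 0.6, 0.6]
correct = [1, 1, 1, 0, 1, 0]
print(round(expected_calibration_error(confidences, correct), 3))  # 0.133

print(roc_auc([0.9, 0.8, 0.4, 0.3], [1, 0, 1, 0]))  # 0.75
```

The pairwise AUC loop is quadratic in the number of examples, which is fine for a small illustration; a library routine is the better choice for large evaluation sets.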

Qualitative Metrics:

- Fairness: Ensuring the model treats all demographics equally is crucial. Techniques like counterfactual fairness and equalized odds help assess and reduce bias.

- Robustness: Models should maintain performance under distribution shifts and adversarial attacks. Metrics like accuracy on perturbed inputs and adversarial accuracy help quantify robustness.

Human Evaluation:

While automatic metrics provide quick feedback, human evaluation offers deeper insights, though it is manual and can be time- and resource-intensive. Methods include:

- Likert Scale Ratings: Annotators rate the model’s outputs on a scale (e.g., 1-5) for qualities like fluency, coherence, and relevance.

- Comparative Evaluation: Judges compare outputs from different models, choosing the better one.

- A/B Testing: Users interact with the model in real-world scenarios, providing feedback on their experience.

GPT-4 as a Judge:

Using GPT-4 as an evaluation tool for assessing the quality of outputs from other language models is a novel and promising approach. GPT-4’s advanced language understanding and generation capabilities make it well-suited for this task. Given the output from another model and a carefully crafted prompt (example provided below), it can analyze the text and report on various aspects of its quality. One recent paper that explores this idea is G-EVAL: NLG Evaluation using GPT-4 with Better Human Alignment. In this work, the authors propose using GPT-4 to evaluate the quality of text generated by other models. They design a set of prompts that elicit GPT-4’s judgment on aspects like fluency, coherence, relevance, and overall quality.

For example, consider the following prompt:

Evaluate Coherence in the Summarization Task

You will be given one summary written for a news article.

Your task is to rate the summary on one metric. Please make sure you read and understand these instructions carefully. Please keep this document open while reviewing, and refer to it as needed.

Evaluation Criteria:

Coherence (1-5) - the collective quality of all sentences. We align this dimension with the DUC quality question of structure and coherence whereby "the summary should be well-structured and well-organized. The summary should not just be a heap of related information, but should build from sentence to sentence to a coherent body of information about a topic."

Evaluation Steps:

Read the news article carefully and identify the main topic and key points.

Read the summary and compare it to the news article. Check if the summary covers the main topic and key points of the news article, and if it presents them in a clear and logical order.

Assign a score for coherence on a scale of 1 to 5, where 1 is the lowest and 5 is the highest based on the Evaluation Criteria.

Example:

Source Text: {{Document}}

Summary: {{Summary}}

Evaluation Form (scores ONLY):

Coherence:
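In practice, a prompt like this is treated as a template: the `{{Document}}` and `{{Summary}}` placeholders are filled in for each example, and the numeric score is parsed out of the judge's reply. The sketch below shows only those two steps. The template is abbreviated from the prompt above, the function names are hypothetical, and the call to the judge model is deliberately omitted because it depends on the API client in use; `judge_reply` is a stand-in string, not real model output.

```python
import re

# Abbreviated version of the coherence prompt, with the same placeholders.
PROMPT_TEMPLATE = """You will be given one summary written for a news article.
Your task is to rate the summary on one metric.

Evaluation Criteria:
Coherence (1-5) - the collective quality of all sentences.

Source Text: {{Document}}

Summary: {{Summary}}

Evaluation Form (scores ONLY):
Coherence:"""


def build_prompt(document: str, summary: str) -> str:
    """Fill the two placeholders for one evaluation example."""
    return PROMPT_TEMPLATE.replace("{{Document}}", document).replace("{{Summary}}", summary)


def parse_score(judge_reply: str, low: int = 1, high: int = 5):
    """Return the first integer within [low, high] in the reply, or None."""
    for match in re.finditer(r"\d+", judge_reply):
        value = int(match.group())
        if low <= value <= high:
            return value
    return None


prompt = build_prompt(
    document="The city council voted on Tuesday to fund a new library.",
    summary="The council approved funding for a library.",
)
judge_reply = "Coherence: 4"  # hypothetical reply from the judge model
print(parse_score(judge_reply))  # 4
```

Returning `None` for replies without a valid score makes it easy to count and inspect unparseable judgments instead of silently treating them as a low rating.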

Statistical Significance Tests:

When comparing the performance of different models, it’s crucial to determine if the observed differences are statistically significant or merely due to chance. Statistical significance tests help make this assessment.

- McNemar’s Test: This test is used for comparing two models on a binary classification task. It considers the discordant pairs (cases where the models disagree) and calculates a test statistic based on the chi-squared distribution.

- Wilcoxon Signed-Rank Test: For evaluating models on continuous or ordinal metrics (e.g., perplexity, BLEU), the Wilcoxon signed-rank test is appropriate. It compares the ranks of the differences between paired observations.

- Bootstrap Resampling: Bootstrapping involves repeatedly sampling from the test set with replacement to create multiple subsamples. The models are evaluated on each subsample, and the distribution of the evaluation metric is analyzed to estimate confidence intervals and assess significance.
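Two of these tests are simple enough to sketch with the standard library alone. The code below is an illustration under stated assumptions, not a definitive implementation: the function names are hypothetical, McNemar's test uses the continuity-corrected chi-squared approximation with one degree of freedom (an exact binomial test is usually preferred when there are few discordant pairs), and the bootstrap reports a percentile confidence interval for the mean paired difference with an assumed 1,000 resamples.

```python
import math
import random


def mcnemar_test(correct_a, correct_b):
    """Continuity-corrected McNemar statistic and p-value for paired outcomes."""
    # Discordant pairs: b = A right and B wrong, c = A wrong and B right.
    b = sum(1 for x, y in zip(correct_a, correct_b) if x and not y)
    c = sum(1 for x, y in zip(correct_a, correct_b) if not x and y)
    if b + c == 0:
        return 0.0, 1.0
    statistic = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of chi-squared with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


def paired_bootstrap_ci(scores_a, scores_b, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile confidence interval for the mean of (scores_a - scores_b)."""
    rng = random.Random(seed)
    n = len(scores_a)
    diffs = []
    for _ in range(n_resamples):
        # Resample test-set indices with replacement, keeping pairs aligned.
        idx = [rng.randrange(n) for _ in range(n)]
        diffs.append(sum(scores_a[i] - scores_b[i] for i in idx) / n)
    diffs.sort()
    lower = diffs[int((alpha / 2) * n_resamples)]
    upper = diffs[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Toy data: 15 items only model A gets right, 5 only model B, 80 both.
correct_a = [1] * 15 + [0] * 5 + [1] * 80
correct_b = [0] * 15 + [1] * 5 + [1] * 80

statistic, p_value = mcnemar_test(correct_a, correct_b)
print(statistic, p_value < 0.05)  # 4.05 True

low, high = paired_bootstrap_ci(correct_a, correct_b)
print(low, high)  # interval for the accuracy difference; excludes 0 if significant
```

If the bootstrap interval excludes zero, the difference between the two models is unlikely to be explained by test-set sampling alone. The fixed `seed` only makes the illustration reproducible.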

Other evaluation approaches include:

Linguistic Analysis:

Evaluating the linguistic properties of the generated text provides insights into a model’s language understanding and generation capabilities.

- Grammaticality: Datasets like the Corpus of Linguistic Acceptability (CoLA) or the Grammaticality Judgment Dataset can be used to train and benchmark classifiers that assess the grammatical correctness of generated sentences.

- Coherence: Metrics such as the Entity Grid or the Discourse Coherence Model evaluate the coherence and logical flow of generated text by analyzing entity transitions and discourse relations.

- Diversity: Measuring the diversity of generated text helps ensure that the model is not simply memorizing and reproducing training data. Metrics like Self-BLEU or Distinct-N quantify the uniqueness of generated tokens or n-grams.
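Of the diversity metrics mentioned, Distinct-N is the simplest to compute: it is the ratio of unique n-grams to total n-grams across a set of generated texts. The following is a minimal sketch; the function name and the lowercase whitespace tokenization are assumptions made for illustration.

```python
def distinct_n(texts, n=2):
    """Ratio of unique n-grams to total n-grams across all texts."""
    total = 0
    unique = set()
    for text in texts:
        tokens = text.lower().split()  # assumed tokenization
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0


samples = ["the cat sat", "the cat ran"]
print(distinct_n(samples, n=2))            # 0.75
print(round(distinct_n(samples, n=1), 3))  # 0.667
```

Values close to 1 mean almost every n-gram is unique, while values near 0 indicate highly repetitive output.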

Continuous Evaluation:

As language models are deployed in real-world applications, continuous evaluation becomes essential to monitor their performance over time and adapt to evolving user needs.

- Online Learning: Incorporating user feedback and interactions into the model’s training process allows for continuous improvement. Techniques like active learning and reinforcement learning can be employed to update the model based on real-world data.

- Concept Drift Detection: Monitoring the model’s performance for concept drift helps identify when the data distribution has shifted, and the model’s predictions become less accurate. Techniques like adaptive windowing and ensemble learning can help detect and mitigate concept drift.

- Explainable AI: Providing explanations for the model’s predictions enhances transparency and trust. Techniques like attention visualization [39] and feature importance analysis can help users understand the factors influencing the model’s outputs.

In conclusion, evaluating language models is a complex, multifaceted task that requires a comprehensive approach. Automatic evaluation metrics such as Exact Match (EM) and F1 score provide a quick assessment of a model’s performance, while confidence level metrics like Expected Calibration Error (ECE) and Area Under the Curve (AUC) help gauge the model’s calibration and its ability to discriminate between classes.

However, these quantitative measures alone do not provide a comprehensive evaluation. Qualitative metrics, including fairness and robustness, are important for ensuring that models behave ethically and maintain performance under difficult conditions. Fairness metrics help identify and mitigate biases, ensuring that the model treats all demographics equally. Robustness metrics, on the other hand, evaluate the model’s ability to handle distribution shifts and adversarial attacks.

Human evaluation plays an important role in assessing language models, as it provides insights that automatic metrics may overlook. Techniques like Likert scale ratings, comparative evaluation, and A/B testing allow for a more nuanced understanding of the model’s outputs and user experience. These methods can uncover subtle differences in fluency, coherence, and relevance that are difficult to capture through automated means.

The idea of using advanced language models like GPT-4 as judges is an intriguing prospect. By utilizing their language understanding capabilities, these models could potentially provide a more sophisticated evaluation of other models’ outputs. However, this approach requires careful consideration of potential biases and the alignment between the judging model’s preferences and human values.