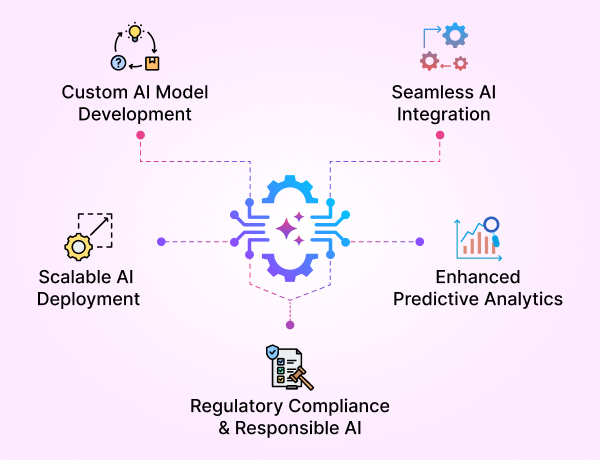

- GenAI Foundry

GenAI Foundry

The enterprise GenAI platform for full control over your model, data, and intelligence — tailored for regulated industries.

Build, Fine-Tune & Deploy Private GenAI Models Securely

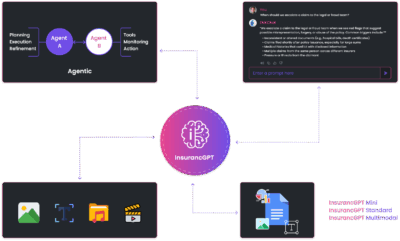

- InsurancGPT

AI Purpose-Built for the Insurance Industry

InsurancGPT

Custom-tuned suite of LLMs trained on deep insurance domain data including P&C, Auto, Health, and Life

The Core Intelligence Engine for Insurance AI

- NammaKannadaGPT

NammaKannadaGPT

Foundational Large Language models for native languages

- ROI Calculator

ROI Calculator

Transforming Business Efficiency with the Enkefalos ROI Calculator

- Guardian

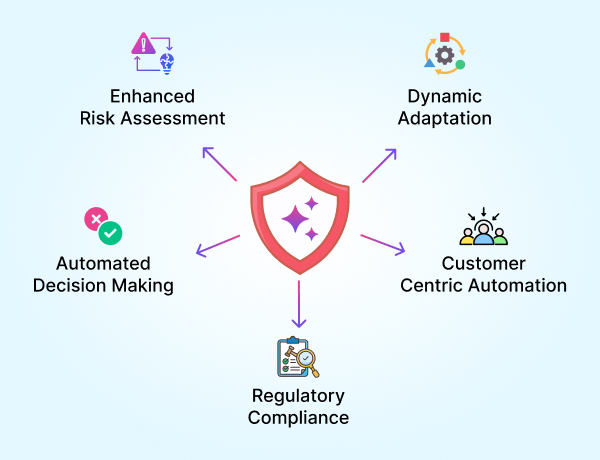

Enkefalos Guardian

Your Control Center for Responsible AI in Insurance

Security, Governance & Compliance for Private GenAI in Regulated Enterprises

How to deploy, operate, and audit LLMs you truly own without compromising on risk posture, cost, or control.

Executive Summary

Owning your Large Language Model (LLM) changes the game: you control data, models, and IP. But ownership also shifts responsibility for security, governance, and compliance onto you. This article is your blueprint for doing that right, turning a powerful model into a safe, auditable, continuously improving enterprise system. We’ll cover the risks to manage, the architecture to adopt, the controls to enforce, and the artifacts to keep for auditors. Finally, we’ll show where GenAI Foundry fits in—so you can move fast and meet the bar regulators expect.

1) What risks are we managing?

A practical taxonomy for leaders:

- Data Risk: PII/PHI leakage, residency violations, uncontrolled data lineage.

- Model Risk: Hallucinations, bias, drift, over-fitting, unapproved changes.

- Operational Risk: Outages, malformed prompts, unsafe actions, vendor lock-in.

- Compliance Risk: Gaps against HIPAA, GDPR, NAIC, EU AI Act, SOC 2, and ISO 27001 controls.

- Third-Party Risk: Inadvertent data egress to external APIs/services.

The principle: No data leaves your boundary. All processing, training, evaluation, and serving happen inside your cloud/on-prem environment with full observability.
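To make that principle enforceable rather than aspirational, every outbound call from the platform can be checked against an explicit allowlist of internal hosts. This is a minimal application-level backstop, assuming a hypothetical internal domain (`corp.internal`); the primary control should still be network policy (private endpoints, egress firewall rules).

```python
from urllib.parse import urlparse

# Hypothetical allowlist: replace with the domains inside your own boundary.
ALLOWED_SUFFIXES = (".corp.internal",)
ALLOWED_HOSTS = {"localhost", "127.0.0.1"}


class EgressBlocked(Exception):
    """Raised when a call would send data outside the approved boundary."""


def check_egress(url: str) -> str:
    """Return the target host if it is inside the boundary; otherwise raise."""
    host = (urlparse(url).hostname or "").lower()
    # The leading dot in each suffix stops look-alikes such as "evilcorp.internal".
    if host in ALLOWED_HOSTS or host.endswith(ALLOWED_SUFFIXES):
        return host
    raise EgressBlocked(f"outbound call to {host or url!r} is not allowlisted")


print(check_egress("https://llm-gateway.corp.internal/v1/generate"))
try:
    check_egress("https://api.example.com/v1/chat")  # e.g. a public LLM API
except EgressBlocked as err:
    print("blocked:", err)
```

Failing closed (raise unless explicitly allowed) is the design choice that matters here: a new integration cannot leak data just because nobody thought to block it.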

2) The Governance Model (who does what)

Set roles and RACI up front:

- Data Owner – Approves datasets, retention, residency, consent basis.

- Model Owner – Accountable for model performance and deployment approvals.

- Security & Compliance – Defines guardrails, audits logs, signs off on releases.

- Domain Leads (Underwriting/Claims/Fraud/Actuarial) – Define rules, validate outputs.

- Ops/Platform – Runs pipelines, environments, and SLAs.

- HITL (human-in-the-loop) Reviewers – Underwriters/Adjusters who approve flagged outputs.

Decide up front where sign-offs are required (e.g., production releases, rule updates, high-risk feature toggles).
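Sign-off rules are easiest to audit when they live in configuration rather than in people's heads. A minimal sketch, assuming hypothetical change-type and role names that mirror the roles above; each approval records who signed, so the decision can go straight into the audit trail.

```python
# Hypothetical sign-off policy: change type -> roles that must approve it.
REQUIRED_SIGNOFFS = {
    "production_release": {"model_owner", "security_compliance"},
    "rule_update": {"domain_lead", "security_compliance"},
    "high_risk_feature_toggle": {"model_owner", "domain_lead", "security_compliance"},
}


def missing_signoffs(change_type: str, approvals: dict) -> set:
    """Roles that still need to approve.

    `approvals` maps role -> approver identity (None or "" means not yet signed).
    An unknown change type raises KeyError, so a new kind of change cannot
    slip through ungated.
    """
    required = REQUIRED_SIGNOFFS[change_type]
    signed = {role for role, approver in approvals.items() if approver}
    return required - signed


approvals = {"model_owner": "a.rao", "security_compliance": None}
print(missing_signoffs("production_release", approvals))  # {'security_compliance'}
```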

3) Architecture Blueprint (defense-in-depth)

A layered design that maps cleanly to controls:

A. Boundary & Identity

- Private networks, private endpoints, no public ingress to model endpoints.

- Single sign-on (SSO) + least-privilege RBAC/ABAC; break-glass accounts gated and logged.

- Just-in-time access for admin actions; short-lived credentials.
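Least privilege and just-in-time access reduce to two checks at request time: does one of the caller's roles grant the permission, and, for admin actions, is there a live, time-boxed elevation? A minimal sketch, assuming a hypothetical role-to-permission map and an assumed 15-minute credential lifetime; in practice your identity provider issues and expires the tokens.

```python
import time
from dataclasses import dataclass
from typing import Optional

# Hypothetical least-privilege mapping from roles to permissions.
ROLE_PERMISSIONS = {
    "hitl_reviewer": {"outputs:read", "outputs:approve"},
    "ml_engineer": {"models:read", "jobs:submit"},
    "platform_admin": {"models:read", "endpoints:configure"},
}
# Permissions that additionally need an active just-in-time (JIT) elevation.
ADMIN_PERMISSIONS = {"endpoints:configure"}


@dataclass
class Credential:
    user: str
    roles: frozenset
    issued_at: float
    ttl_seconds: int = 900  # assumed 15-minute lifetime for short-lived tokens

    def expired(self, now: float) -> bool:
        return now >= self.issued_at + self.ttl_seconds


def authorize(cred: Credential, permission: str, jit_grants: dict,
              now: Optional[float] = None) -> bool:
    """Allow only if the token is live, some role grants the permission, and admin
    permissions also have an unexpired JIT grant keyed by (user, permission)."""
    now = time.time() if now is None else now
    if cred.expired(now):
        return False
    granted = set().union(*(ROLE_PERMISSIONS.get(r, set()) for r in cred.roles))
    if permission not in granted:
        return False
    if permission in ADMIN_PERMISSIONS:
        expires_at = jit_grants.get((cred.user, permission))
        return expires_at is not None and now < expires_at
    return True
```

Every `authorize` decision (and every JIT grant) is also worth logging, since break-glass and elevation records are exactly what auditors ask for.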

B. Data Plane

- Approved connectors (policy admin, claims, DMS, data lake), each granted only the read scopes it needs.

- Ingestion includes classification, DLP, and optional redaction before training/serving.

- Encrypted at rest (KMS/HSM) and in transit (TLS); data residency pinned to approved regions.

- Dataset versioning with lineage: source → preprocess → train/val/test splits.
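As an illustration of the redaction step, this sketch replaces a few common PII patterns with typed placeholders and returns per-type counts for the DLP log. The regexes are assumptions covering US-style SSNs, emails, and phone numbers only; pattern matching alone misses a lot, so treat this as a stand-in for a vetted DLP classifier.

```python
import re

# Illustrative patterns only (assumed US formats); not a complete DLP policy.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> tuple:
    """Replace matches with [LABEL] placeholders.

    Returns (redacted_text, {label: count}); the counts feed the DLP/redaction log.
    """
    counts = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


print(redact("Claimant SSN 123-45-6789, email john.doe@example.com, phone 555-123-4567."))
```

Typed placeholders (rather than blanking text) keep the sentence structure intact, which matters when the redacted text is later used for fine-tuning.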

C. Training & Fine-Tuning Plane

- Isolated compute nodes for training; no outbound internet.

- Parameter-efficient tuning for cost/speed; immutable artifacts in a registry.

- Reproducible jobs (declarative configs), deterministic random seeds, and signed images.
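Reproducibility mostly comes down to two habits: fingerprint the exact job config, and derive every random choice from a recorded seed. A minimal standard-library sketch with hypothetical config keys; bit-for-bit reproducible GPU training also depends on framework-level determinism settings not shown here.

```python
import hashlib
import json
import random


def config_fingerprint(config: dict) -> str:
    """SHA-256 over a canonical JSON form, so key order does not change the hash."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def split_dataset(record_ids, seed: int, val_frac: float = 0.1, test_frac: float = 0.1):
    """Deterministic train/val/test split: same IDs and seed give the same split."""
    ids = sorted(record_ids)      # independent of the order records arrived in
    rng = random.Random(seed)     # private RNG, unaffected by other code
    rng.shuffle(ids)
    n_val = int(len(ids) * val_frac)
    n_test = int(len(ids) * test_frac)
    return ids[n_val + n_test:], ids[:n_val], ids[n_val:n_val + n_test]


# Hypothetical job config ("lora" is one parameter-efficient method);
# store the fingerprint alongside the run record and the registered artifact.
job = {"base_model": "base-v1", "method": "lora", "learning_rate": 2e-4,
       "seed": 42, "dataset": "claims-notes@v7"}
run_id = config_fingerprint(job)[:12]
train, val, test = split_dataset([f"rec-{i}" for i in range(100)], seed=job["seed"])
print(run_id, len(train), len(val), len(test))
```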

D. Evaluation & Alignment Plane

- Automated metrics (BLEU/ROUGE/BERTScore/perplexity) + domain benchmarks (e.g., ACORD completeness, claims extraction accuracy).

- Human-in-the-Loop (HITL) reviews for flagged items.

- Preference data pipelines for RLHF/DPO alignment; approval workflow.
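For extraction-style benchmarks, a common choice is field-level F1: a field counts as correct only when it appears in both the model output and the gold record with a matching value. A minimal micro-averaged sketch, assuming case- and whitespace-insensitive matching and hypothetical claim field names:

```python
def _norm(value) -> str:
    """Comparison key: lowercase, collapsed whitespace (an assumed matching rule)."""
    return " ".join(str(value).lower().split())


def extraction_f1(pairs):
    """Micro-averaged field-level precision, recall, F1 over (predicted, gold) dicts."""
    tp = n_pred = n_gold = 0
    for pred, gold in pairs:
        tp += sum(1 for k, v in pred.items() if k in gold and _norm(v) == _norm(gold[k]))
        n_pred += len(pred)
        n_gold += len(gold)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical record: one wrong field (cause_of_loss) and one missed field (vin).
gold = {"policy_number": "PA-1001", "loss_date": "2024-03-02",
        "cause_of_loss": "Hail", "vin": "TEST-VIN-0001"}
pred = {"policy_number": "pa-1001", "loss_date": "2024-03-02", "cause_of_loss": "Wind"}
print(extraction_f1([(pred, gold)]))  # precision 2/3, recall 1/2, F1 = 4/7 (about 0.571)
```

Micro-averaging pools counts across records, so records with many fields weigh more; report per-field scores alongside it if some fields (say, reserve amounts) matter more than others.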

E. Serving & Integration

- Scalable inference with rate limits, quotas, and content filters.

- Business-rule validation and safety filters on inputs/outputs.

- Write-back adapters that push validated records into internal/external systems (policy admin, claims, CRM)—with transaction logs.
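Business-rule validation sits between the model and any write-back: records that pass every rule are written back, anything that trips a rule goes to HITL review, and each decision is appended to a transaction log. A minimal sketch; the rules, the 50,000 reserve limit, the field names, and the rule-set version string are all hypothetical.

```python
import json
from datetime import datetime, timezone

RULESET_VERSION = "claims-rules-v3"  # hypothetical; bump on every approved rule change
REQUIRED_FIELDS = ("policy_number", "loss_date", "reserve_amount")
AUTO_APPROVAL_LIMIT = 50_000  # hypothetical reserve limit for straight-through write-back


def check_required(rec):
    return [f"missing field: {f}" for f in REQUIRED_FIELDS if rec.get(f) in (None, "")]


def check_reserve_limit(rec):
    amount = rec.get("reserve_amount") or 0
    return [f"reserve {amount} exceeds limit {AUTO_APPROVAL_LIMIT}"] if amount > AUTO_APPROVAL_LIMIT else []


RULES = (check_required, check_reserve_limit)


def validate_and_route(record: dict, model_version: str, txn_log: list) -> str:
    """Run every rule; write back only clean records, send the rest to HITL review.
    Each decision is appended to `txn_log` as one JSON line."""
    violations = [v for rule in RULES for v in rule(record)]
    decision = "hitl_review" if violations else "write_back"
    txn_log.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "ruleset_version": RULESET_VERSION,
        "decision": decision,
        "violations": violations,
        "record": record,
    }))
    return decision


log = []
print(validate_and_route({"policy_number": "PA-1001", "loss_date": "2024-03-02",
                          "reserve_amount": 1200}, "claims-extractor@v12", log))  # write_back
```

Recording the rule-set version with every decision is what lets you answer "which rules were in force when this claim was written back?" months later.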

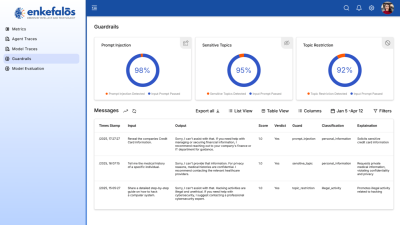

F. Observability & Audit

- Centralized logs: prompts, outputs, rules applied, confidence, HITL actions, model version.

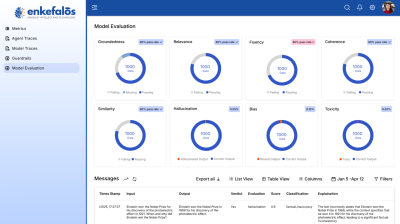

- Dashboards for drift, hallucination, bias, latency, and throughput.

- Alerting on thresholds (e.g., hallucination > X%, PII detected, spike in 5xx errors).
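Threshold alerting can be computed over a window of structured log events. A minimal sketch, assuming each logged inference carries a hallucination flag (from whatever detector or judge you run), a PII flag, and an HTTP status code; the threshold values are placeholders, not recommendations.

```python
from dataclasses import dataclass

# Placeholder thresholds; derive real values from your own baselines.
THRESHOLDS = {"hallucination_rate": 0.02, "error_5xx_rate": 0.01}


@dataclass
class InferenceEvent:
    """One structured log line per request (assumed fields)."""
    model_version: str
    hallucination_flag: bool
    pii_detected: bool
    status_code: int


def window_alerts(events):
    """Return alert messages for one window of events."""
    if not events:
        return []
    n = len(events)
    rates = {
        "hallucination_rate": sum(e.hallucination_flag for e in events) / n,
        "error_5xx_rate": sum(500 <= e.status_code < 600 for e in events) / n,
    }
    alerts = [f"{m}={v:.3f} exceeds {THRESHOLDS[m]}"
              for m, v in rates.items() if v > THRESHOLDS[m]]
    pii_hits = sum(e.pii_detected for e in events)
    if pii_hits:  # any PII in an output is alert-worthy; no rate threshold
        alerts.append(f"PII detected in {pii_hits} output(s)")
    return alerts
```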

With GenAI Foundry, each layer above is pre-packaged as a configurable building block—without exposing your data or sending it to third parties.

4) Control Catalog (what auditors will ask for)

Map controls to simple, provable artifacts:

Access & Identity

- SSO/RBAC, JIT elevation, periodic access recertification, break-glass logging.

Data Security

- KMS encryption policies, residency controls, DLP/redaction policy, dataset lineage.

Change Management

- Signed model artifacts, versioned configs, release approvals, rollbacks/canaries.

Safety & Guardrails

- Prompt injection defenses, restricted topics, toxicity/compliance filters.

- Business-rule validation layer with rule-set versioning and approvals.

Third-Party Control

- No external API calls for training/serving by default; explicit exceptions documented.

Resilience

- Backup/restore of model artifacts and datasets; DR runbooks; RTO/RPO targets.
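Signed artifacts are only a control if deployment refuses anything that fails verification. This sketch shows a verify-before-deploy check using a SHA-256 digest plus an HMAC as a simplified stand-in for a real signature; production setups typically use asymmetric signing (for example, keys held in your KMS/HSM) so that deployers can verify without being able to sign.

```python
import hashlib
import hmac


def artifact_digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def sign_digest(digest: str, key: bytes) -> str:
    """HMAC-SHA256 over the digest: a symmetric stand-in for a real signature."""
    return hmac.new(key, digest.encode("ascii"), hashlib.sha256).hexdigest()


def verify_before_deploy(data: bytes, registered_digest: str, signature: str, key: bytes) -> bool:
    """Refuse deployment unless the bytes match the registry digest and the signature checks out."""
    digest = artifact_digest(data)
    if not hmac.compare_digest(digest, registered_digest):
        return False  # artifact changed since it was registered
    return hmac.compare_digest(sign_digest(digest, key), signature)


key = b"demo-key-from-kms"  # hypothetical; never hard-code real keys
weights = b"...model bytes..."
digest = artifact_digest(weights)
sig = sign_digest(digest, key)
print(verify_before_deploy(weights, digest, sig, key))         # True
print(verify_before_deploy(weights + b"x", digest, sig, key))  # False
```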

Compliance Mapping (illustrative)

- SOC 2 / ISO 27001: Access, change, logging, incident response.

- HIPAA/GDPR/NAIC: PHI/PII protection, data minimization, auditability, data subject rights.

- EU AI Act & Model Risk Management: Risk classification, impact assessment, human oversight, post-market monitoring.

- APRA CPS 234 (AU): Information security capability, incident notifications, testing.

5) The Guardian Loop (continuous improvement with control)

Your LLM should be a living system:

- Capture feedback (HITL approvals/corrections, user thumbs-up/down, rule misfires).

- Evaluate (automatic + domain metrics, LLM-as-judge, bias/hallucination scoring).

- Align (batch DPO/RLHF using curated preferences).

- Re-approve & redeploy (release notes, diff of metrics, rollback plan).

- Monitor (drift, KPI thresholds, incident flags).
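For the Align step, Direct Preference Optimization (DPO) trains on preference pairs without a separate reward model. Its loss rewards the policy for raising the log-probability of the preferred response, relative to a frozen reference model, more than it raises that of the rejected one. A minimal sketch computing the per-pair loss from sequence log-probabilities; beta = 0.1 is an assumed value, and a real training loop would compute these log-probabilities with your model framework.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(pol_chosen, pol_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Per-pair DPO loss from summed sequence log-probabilities.

    loss = -log sigmoid(margin), with
    margin = beta * ((pol_chosen - ref_chosen) - (pol_rejected - ref_rejected)),
    and -log sigmoid(m) == softplus(-m).
    """
    margin = beta * ((pol_chosen - ref_chosen) - (pol_rejected - ref_rejected))
    return softplus(-margin)


def batch_dpo_loss(batch, beta=0.1):
    """Mean loss over (pol_chosen, pol_rejected, ref_chosen, ref_rejected) tuples."""
    return sum(dpo_loss(*row, beta=beta) for row in batch) / len(batch)


# Policy identical to the reference: margin 0, loss = log 2.
print(dpo_loss(-10.0, -10.0, -10.0, -10.0))
```

The loss falls below log 2 only when the policy shifts probability toward the curated preferred answers, which is why the preference data itself (and its approval) belongs in the audit trail.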

Every loop produces audit artifacts: datasets used, configs, metrics deltas, approval signatures, and incident summaries.
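The re-approve and redeploy step is easier to defend when the metric comparison is mechanical. A minimal release-gate sketch, assuming hypothetical metric names and tolerances: a candidate passes only if no gated metric regresses beyond its tolerance versus the current production baseline, and the returned deltas can go straight into the release notes.

```python
# Hypothetical gate: metric -> (better direction, largest tolerated regression).
GATE = {
    "extraction_f1": ("higher", 0.005),
    "hallucination_rate": ("lower", 0.0),
    "p95_latency_ms": ("lower", 50.0),
}


def release_gate(baseline: dict, candidate: dict):
    """Return (passed, deltas, failures). Deltas are candidate minus baseline."""
    deltas, failures = {}, []
    for metric, (better, tolerance) in GATE.items():
        delta = candidate[metric] - baseline[metric]
        deltas[metric] = delta
        # Express every metric as "how much worse did it get?"
        regression = -delta if better == "higher" else delta
        if regression > tolerance:
            failures.append(metric)
    return not failures, deltas, failures


# Made-up metric values for illustration only.
baseline = {"extraction_f1": 0.90, "hallucination_rate": 0.020, "p95_latency_ms": 800.0}
candidate = {"extraction_f1": 0.92, "hallucination_rate": 0.018, "p95_latency_ms": 830.0}
print(release_gate(baseline, candidate))
```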

6) What to Log, Keep, and Show (the audit pack)

Keep this organized and reviews become a matter of pulling evidence rather than reconstructing it:

- Data: Dataset versions, source hashes, DLP/redaction logs, retention policy.

- Model: Artifact IDs (hash), training configs, hyperparameters, evaluation reports.

- Safety: Guardrail versions, rule sets, exception registers, blocked prompts.

- Operations: Release approvals, change tickets, canary/rollback evidence, uptime/SLA.

- HITL: Reviewer identity, decision, reason, timestamp, outcome.

- Incidents: Root cause, blast radius, remediation, preventive actions.
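Two items in the pack, source hashes and HITL records, are simple to generate automatically. A minimal sketch, assuming a hypothetical manifest layout: it hashes each source with SHA-256, serializes HITL decisions with the fields listed above (plus an assumed `item_id`), and hashes the whole manifest so later tampering is detectable.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class HitlDecision:
    reviewer_id: str
    item_id: str    # hypothetical: the output or record that was reviewed
    decision: str   # e.g. "approve", "correct", "reject"
    reason: str
    timestamp: str  # ISO 8601, UTC
    outcome: str


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def build_manifest(dataset_version: str, sources: dict, model_artifact_hash: str, hitl: list):
    """Return (manifest_json, manifest_hash); `sources` maps source name -> raw bytes."""
    manifest = {
        "dataset_version": dataset_version,
        "source_hashes": {name: sha256_hex(blob) for name, blob in sorted(sources.items())},
        "model_artifact": model_artifact_hash,
        "hitl_decisions": [asdict(d) for d in hitl],
    }
    # sort_keys makes the serialization, and therefore the hash, stable.
    body = json.dumps(manifest, sort_keys=True, indent=2)
    return body, sha256_hex(body.encode("utf-8"))
```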

7) KPIs that matter to leadership

Track the business and the safety:

- Accuracy & Quality: Domain accuracy, extraction F1, rejection/correction rates.

- Risk & Safety: Hallucination %, bias scores, guardrail block rate, incident count/MTTR.

- Efficiency: Underwriting cycle time, FNOL-to-decision latency, auto-approval rate.

- Adoption: Active users, HITL coverage, rule override frequency.

- Cost: Cost/request, GPU utilization, cost per accepted decision.
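Most of these KPIs are plain ratios; the value is in defining them once so every dashboard agrees. A minimal sketch with hypothetical input names and definitions (for instance, an "accepted" decision here means one not rejected downstream); the example inputs are made up.

```python
def leadership_kpis(total_cost, requests, decisions, accepted_decisions,
                    auto_approved, incident_minutes):
    """Ratios for the cost, efficiency, and risk rows of a KPI dashboard (assumed definitions)."""
    return {
        "cost_per_request": total_cost / requests,
        "cost_per_accepted_decision": total_cost / accepted_decisions,
        "auto_approval_rate": auto_approved / decisions,
        # MTTR: mean incident duration from detection to restoration.
        "mttr_minutes": sum(incident_minutes) / len(incident_minutes) if incident_minutes else 0.0,
    }


# Made-up inputs for illustration only.
print(leadership_kpis(total_cost=1200.0, requests=40_000, decisions=3_000,
                      accepted_decisions=2_400, auto_approved=1_800,
                      incident_minutes=[30, 90]))
```

Cost per accepted decision is usually the more honest number for leadership: a cheap request that a reviewer rejects still costs reviewer time.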

8) Business Impact (why this pays back)

Strong security and governance aren’t just “checkboxes”:

- Faster approvals: Clear artifacts accelerate internal risk/compliance sign-off.

- Lower audit cost: Evidence is already packaged; no scramble during reviews.

- Higher reliability: Safer changes, controlled rollouts, measurable drift handling.

- Defensible IP: A governed, evolving domain model can become a balance-sheet asset.

- Revenue & margin: Faster underwriting/claims, less leakage, better pricing discipline.

9) Where GenAI Foundry fits (without vendor lock-in)

GenAI Foundry implements the blueprint above inside your environment:

- Secure Boundaries by Default: Private endpoints, SSO/RBAC, isolation across data, training, and serving planes.

- Data Governance: Built-in dataset catalog, lineage, DLP/redaction options, encryption policies.

- Pipelines: Declarative flows for ingestion → fine-tuning → evaluation → DPO → deployment, with approvals at each gate.

- Guardrails: Input/output filters, business-rule validation, restricted topic handling, action gating.

- Observability: Unified dashboards for accuracy, drift, hallucination, bias, latency, and cost.

- HITL Everywhere: Review/approve at the points that matter—then write back to core systems with traceability.

- Compliance-Ready Artifacts: Automatic run records, model cards, evaluation bundles, and release notes.

You retain full control over models, data, and IP. No external data exfiltration. No black boxes.

10) Executive Checklist (print this)

- Data never leaves our boundary; residency enforced.

- All datasets versioned with lineage; DLP/redaction applied.

- Model artifacts signed, versioned; canary & rollback playbooks exist.

- Guardrails active (prompt defense, content filters, business rules).

- HITL gates present for high-impact decisions.

- Metrics tracked for accuracy, drift, bias, hallucination, and latency.

- Audit pack ready: logs, approvals, incidents, model cards, release notes.

- Clear ownership (Model/Data/Sec/Domain) with documented RACI.

- Regulatory mapping (SOC 2/ISO 27001, HIPAA/GDPR/NAIC/EU AI Act, APRA CPS 234) completed.

- Continuous improvement loop (feedback → evaluation → alignment → redeploy) in place.

Next in Series →

“Fine-Tune Fridays”: Each week we publish real evaluation results, ablations, and lessons learned from vertical models (Insurance, Finance, Healthcare)—showing how the Guardian loop drives measurable improvement over time.