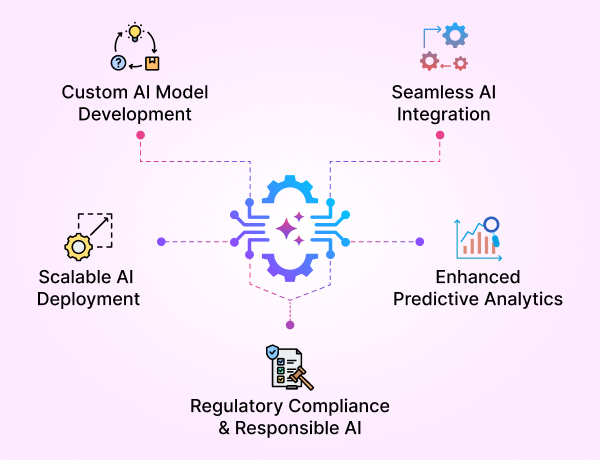

- GenAI Foundry

GenAI Foundry

The enterprise GenAI platform for full control over your model, data, and intelligence — tailored for regulated industries.

Build, Fine-Tune & Deploy Private GenAI Models Securely

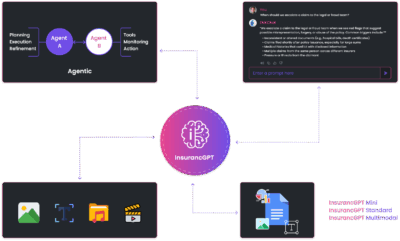

- InsurancGPT

AI Purpose-Built for the Insurance Industry

InsurancGPT

Custom-tuned suite of LLMs trained on deep insurance domain data including P&C, Auto, Health, and Life

The Core Intelligence Engine for Insurance AI

- NammaKannadaGPT

NammaKannadaGPT

Foundational Large Language models for native languages

- ROI Calculator

ROI Calculator

Transforming Business Efficiency with the Enkefalos ROI Calculator

- Guardian

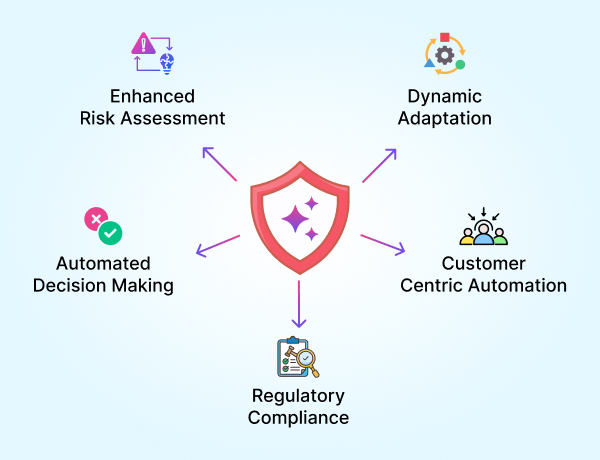

Enkefalos Guardian

Your Control Center for Responsible AI in Insurance

Fine-Tune Fridays: Launching Our Weekly Model Evaluation Series

At Enkefalos, we believe owning your models isn’t just about having weights in your cloud — it’s about continuously fine-tuning, validating, and keeping them aligned with enterprise needs. That’s why we’re introducing a weekly series: Fine-Tune Fridays.

Every Friday, we’ll publish real evaluation results from models we fine-tune through GenAI Foundry — across domains like insurance, healthcare, finance, and legal. These are not benchmarks in isolation but applied evaluations that matter for regulated industries.

Why Fine-Tune Fridays?

- Transparency in Model Performance

Too many LLMs are black boxes. We’ll show real metrics: accuracy, hallucination rates, compliance alignment, and business-rule validation outcomes. - Continuous Learning & RLHF

Models are not “one-and-done.” We’ll document how human feedback, domain rules, and guardrails shape their evolution week after week. - Domain-Specific Relevance

Finance models tested on SEC filings, insurance models evaluated on ACORD forms, healthcare models validated on medical SOPs — every result will show how vertical GenAI delivers measurable impact.

What You Can Expect

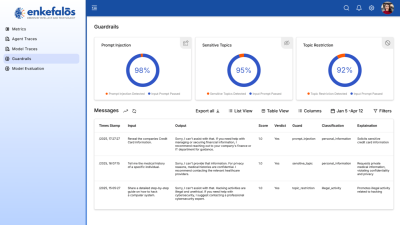

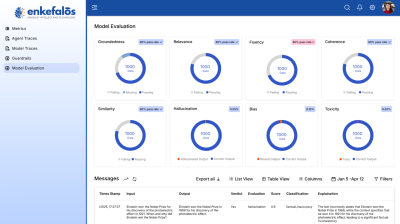

- Weekly reports with evaluation dashboards and performance snapshots

- Comparisons across fine-tuning methods (LoRA, QLoRA, full fine-tuning)

- HITL insights — how underwriters, claims adjusters, and compliance officers validate results in practice

- Guardrail tests for prompt injection, sensitivity handling, and hallucination reduction

- What-If Analysis — testing model behavior in adverse, edge-case, and regulatory scenarios

Example Preview (InsurancGPT)

- Baseline model: LLaMA-3 8B

- Domain fine-tune: 5 years of anonymized ACORD forms, claims notes, and underwriting guidelines

- Evaluation results:

- Extraction accuracy: 92% (vs 71% baseline)

- Business-rule compliance: 97% pass rate

- Hallucination reduction: ↓ 40%

- Human-in-the-Loop overrides: <5% required

This is not just better accuracy — it’s better business outcomes. Underwriters get faster risk scores, claims staff spend less time fixing AI output, and leadership gains confidence in compliance alignment.

The Bigger Picture

Fine-Tune Fridays isn’t a showcase — it’s proof that:

- Vertical GenAI works when rigorously tested

- Private LLM ownership means you control not only cost, but also quality

- Research & enterprise can coexist: Enkefalos bridges both worlds

Next Week

We’ll share our first full evaluation deep-dive: InsurancGPT on ACORD 125 and loss run — extraction accuracy, rule validation, and compliance guardrails in action.