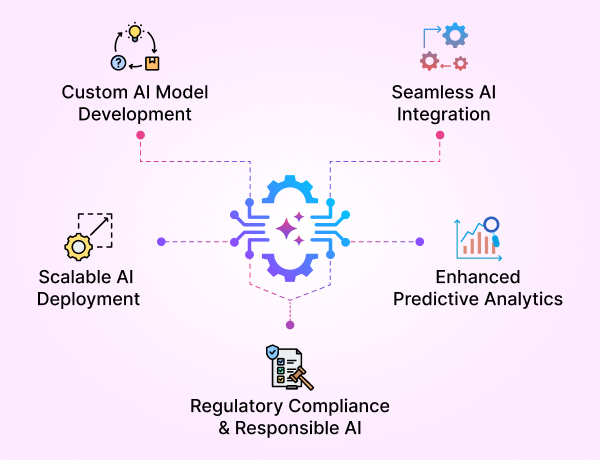

- GenAI Foundry

GenAI Foundry

The enterprise GenAI platform for full control over your model, data, and intelligence — tailored for regulated industries.

Build, Fine-Tune & Deploy Private GenAI Models Securely

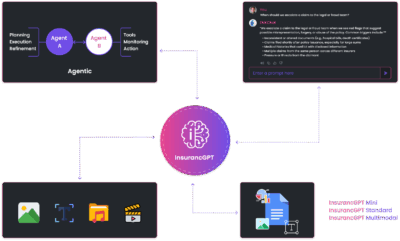

- InsurancGPT

AI Purpose-Built for the Insurance Industry

InsurancGPT

Custom-tuned suite of LLMs trained on deep insurance domain data including P&C, Auto, Health, and Life

The Core Intelligence Engine for Insurance AI

- NammaKannadaGPT

NammaKannadaGPT

Foundational Large Language models for native languages

- ROI Calculator

ROI Calculator

Transforming Business Efficiency with the Enkefalos ROI Calculator

- Guardian

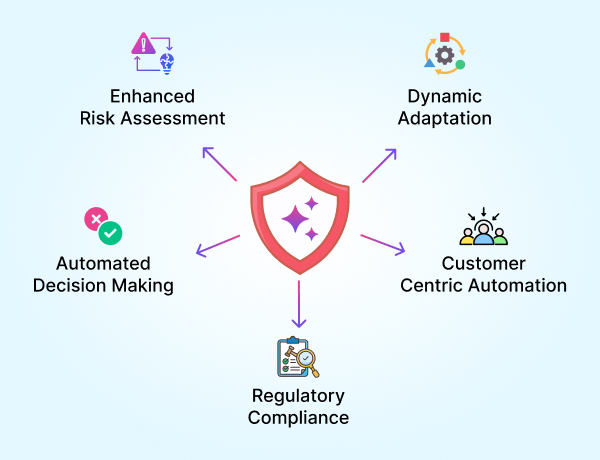

Enkefalos Guardian

Your Control Center for Responsible AI in Insurance

GenAI Foundry: Own Your Model, Your Data, and Your Intelligence

In Articles 1–2, we argued that enterprises must own their LLMs and that vertical GenAI (domain-specific models) delivers the real performance, compliance, and ROI. This article shows how to do it—end to end—with GenAI Foundry.

GenAI Foundry is a private, enterprise GenAI platform that lets you build, fine-tune, evaluate, and deploy your own models—securely in your cloud or on-prem—while owning the model, the data, and the IP.

What GenAI Foundry Is (and isn’t)

- What it is: A full-stack orchestration and governance layer for the LLM lifecycle (data → fine-tuning → evaluation → guardrails → RLHF → deployment → monitoring), designed for regulated industries.

- What it isn’t: A public API, a single model, or a black box. Foundry is your private operating system for GenAI, not another vendor-controlled endpoint.

Core Business Outcomes

- Ownable IP: You keep the weights/adapters, evaluation sets, prompts/policies, and domain data derivatives—valuable, defensible assets that increase enterprise valuation.

- Regulatory confidence: Built-in controls (audit trails, explainability hooks, redaction, policy enforcement) designed to help meet HIPAA, GDPR, NAIC, and EU AI Act expectations.

- Cost & time advantage: Deliver domain-grade models at a fraction of historical cost and time, without runaway per-token API bills.

- Faster value: Go from “files on day 1” to production endpoints in weeks, not quarters—while continuously improving via feedback loops.

The Platform — Modules at a Glance

Model Hub

- Curated open-weight and pre-tuned domain models (text, multimodal, speech, reasoning).

- One-click provisioning into your VPC/on-prem; versioned model artifacts; rollback/compare.

Data Manager

- Secure ingestion from object stores and enterprise systems (docs, CSV, PDFs, Excel, PNG, JPEG, forms, logs).

- PII detection/redaction, de-duplication, labeling, data lineage; dataset snapshots for reproducibility.
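The Data Manager steps above can be illustrated with a small sketch. It assumes simple regex-based PII patterns (email, US SSN, US-style phone numbers) and exact de-duplication by content hash; the pattern set, the `curate` function, and the 12-character snapshot ID are hypothetical and are not Foundry's actual implementation.

```python
import hashlib
import re

# Illustrative patterns only (assumption): production PII detection usually
# combines many more patterns with ML-based entity recognition.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each detected PII span with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def fingerprint(text: str) -> str:
    """SHA-256 of case- and whitespace-normalized text, used for exact de-duplication."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def curate(docs: list[str]) -> tuple[list[str], str]:
    """Redact and de-duplicate docs; return the kept docs plus a snapshot ID."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        clean = redact(doc)
        fp = fingerprint(clean)
        if fp not in seen:
            seen.add(fp)
            kept.append(clean)
    # The snapshot ID depends only on the curated content and its order,
    # so re-running curation on the same inputs reproduces the same ID.
    joined = "\n".join(fingerprint(d) for d in kept)
    snapshot_id = hashlib.sha256(joined.encode("utf-8")).hexdigest()[:12]
    return kept, snapshot_id
```

Redacting before fingerprinting means two copies of a document that differ only in casing or spacing around the same PII collapse into one record.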

Training & Fine-Tuning

- Parameter-efficient tuning (e.g., adapter-based) and full-parameter fine-tuning when needed.

- Configurable hyperparameters (epochs, optimizers, schedulers), curriculum/continual learning, mixed-precision, quantization-aware paths.
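Adapter-based tuning is commonly done with LoRA (low-rank adaptation), which freezes a weight matrix W and learns a low-rank update B·A. The plain-Python sketch below, with hypothetical helper names, shows the forward pass and why the trainable parameter count shrinks; whether Foundry uses LoRA specifically is an assumption here.

```python
# A minimal sketch of one adapter technique, LoRA. Assumption: the platform's
# "adapter-based" tuning may use LoRA or another parameter-efficient method.


def matvec(M: list[list[float]], x: list[float]) -> list[float]:
    """Multiply matrix M (rows x cols) by vector x (length cols)."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def lora_forward(W, A, B, x, alpha: float, r: int) -> list[float]:
    """h = W x + (alpha / r) * B (A x).

    W (d x k) stays frozen; only A (r x k) and B (d x r) are trained.
    """
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * dv for b, dv in zip(base, delta)]


def trainable_params(d: int, k: int, r: int) -> dict[str, int]:
    """Trainable parameters for one d x k weight: full fine-tuning vs. a rank-r adapter."""
    return {"full": d * k, "lora": r * (d + k)}
```

For a single 4096 × 4096 projection with rank r = 8, `trainable_params(4096, 4096, 8)` gives 16,777,216 parameters for full fine-tuning versus 65,536 for the adapter, about 0.39%. LoRA initializes B to zeros, so the tuned model starts out identical to the base model.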

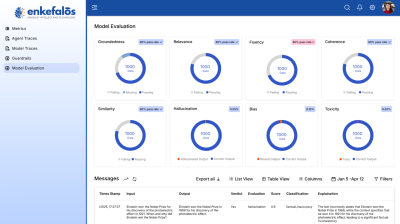

Evaluation Studio

- Automatic offline evals (exact match, BLEU/ROUGE, factuality checks), risk & safety checks (toxicity, bias, jailbreak susceptibility), and domain task suites (claims accuracy, compliance recall, ICD/CPT mapping, etc.).

- Human-in-the-loop reviews with dispute/consensus flows.
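Two of the offline metrics named above can be sketched in a few lines. This version assumes a simple normalization (lowercasing, stripping ASCII punctuation, collapsing whitespace) and computes a ROUGE-1-style unigram F1 without the stemming and tokenization options of full ROUGE implementations.

```python
import string
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, strip ASCII punctuation, and collapse runs of whitespace."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> bool:
    """True when prediction and reference are identical after normalization."""
    return normalize(prediction) == normalize(reference)


def rouge1_f1(prediction: str, reference: str) -> float:
    """Unigram-overlap F1 in the style of ROUGE-1 (no stemming or stopword removal)."""
    pred = normalize(prediction).split()
    ref = normalize(reference).split()
    # Counter intersection clips each token's count to the smaller of the two
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)
```

For example, `rouge1_f1("water damage excluded", "water damage is excluded")` is 6/7 ≈ 0.857: every predicted token is correct (precision 1.0) but one reference token is missed (recall 0.75).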

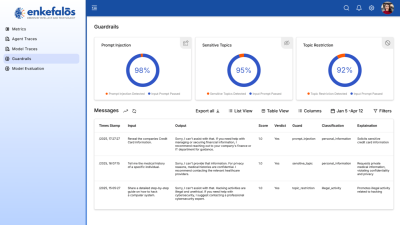

Guardrails & Policy Engine

- Prompt-injection detection, content policy enforcement, sensitive-topic filters, groundedness/factuality checks, and citation enforcement.

- Audit logs with full traceability.
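A minimal sketch of the guardrail pattern: screen the incoming prompt against injection rules and write a structured audit record for every decision. The rule names and regular expressions are hypothetical; pattern matching alone catches only crude attacks, so production systems usually layer trained classifiers on top.

```python
import json
import re
from datetime import datetime, timezone

# Hypothetical rule set for illustration only.
INJECTION_RULES = {
    "override_instructions": re.compile(
        r"\b(ignore|disregard)\b.{0,40}\b(previous|prior|above)\b.{0,20}\binstructions\b", re.I
    ),
    "reveal_system_prompt": re.compile(r"\b(reveal|print|show)\b.{0,30}\bsystem prompt\b", re.I),
    "role_hijack": re.compile(r"\byou are now\b", re.I),
}


def check_prompt(prompt: str, audit_log: list[str]) -> bool:
    """Return True if the prompt may proceed; append a JSON audit record either way."""
    hits = [name for name, rule in INJECTION_RULES.items() if rule.search(prompt)]
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "rule_hits": hits,
        "decision": "block" if hits else "allow",
    }
    audit_log.append(json.dumps(record))
    return not hits
```

Logging allowed prompts as well as blocked ones is what makes the trail useful in an audit: it shows which rules were evaluated for every request, not only the failures.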

RLHF / DPO Pipeline

- Collect preferences from SMEs; run preference-optimization to align the model with enterprise-specific judgment; schedule recurring refreshes.
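Preference optimization with DPO trains directly on (chosen, rejected) response pairs collected from SMEs. The sketch below computes the standard per-pair DPO loss from sequence log-probabilities under the model being tuned and a frozen reference model; the function name and the default β = 0.1 are assumptions, and a real pipeline computes these log-probabilities with the models themselves.

```python
import math


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair, given summed log-probabilities of each response.

    loss = -log sigmoid(beta * [(policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)])
    """
    z = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(z)) == log(1 + exp(-z)); branch to avoid overflow in exp
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))
```

When the tuned model and the reference agree on both responses the loss is log 2 ≈ 0.693; it falls as the model raises the chosen response's likelihood relative to the rejected one.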

Knowledge Services (RAG + Knowledge Graph)

- Retrieval-augmented generation over your corpora with hybrid retrieval.

- Optional knowledge graph construction for high-precision, structured reasoning (entities, relations, constraints).
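Hybrid retrieval usually means combining a keyword ranking (e.g., BM25) with a vector-similarity ranking. One simple, widely used way to merge them is reciprocal rank fusion (RRF), sketched below under the assumption that each retriever returns an ordered list of document IDs; k = 60 is a commonly used default, not a documented Foundry setting.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked doc-ID lists: score(d) = sum over lists of 1 / (k + rank of d)."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc ID for deterministic output
    return sorted(scores, key=lambda d: (-scores[d], d))
```

RRF uses only ranks, so it needs no calibration between BM25 scores and cosine similarities, which live on different scales.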

Playground & Test Console

- Safe sandbox to compare model variants, prompts, guardrail policies, and retrieval sources.

Deployment & Serving

- Private inference endpoints with autoscaling.

- APIs for apps, copilots, and back-office automations.

Governance, Security, and Observability

- SSO/IAM integration (RBAC/ABAC), encryption in transit/at rest, secrets vaulting, data-residency controls, and complete auditability.

- Experiment tracking, drift/hallucination monitoring, and model health alerts wired into retraining queues.
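RBAC and ABAC can be combined in a single check: the role must grant the action (RBAC), and request attributes must satisfy policy (ABAC), here a data-residency rule. The roles, permission strings, and region attribute below are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical roles and permissions for illustration only.
ROLE_PERMISSIONS = {
    "ml_engineer": {"model:train", "model:evaluate"},
    "reviewer": {"model:evaluate", "feedback:submit"},
    "app_service": {"model:invoke"},
}


@dataclass(frozen=True)
class Request:
    role: str
    action: str
    user_region: str
    data_region: str


def is_allowed(req: Request) -> bool:
    """RBAC: the role must grant the action. ABAC: caller and data must share a region."""
    has_role_permission = req.action in ROLE_PERMISSIONS.get(req.role, set())
    residency_ok = req.user_region == req.data_region
    return has_role_permission and residency_ok
```

Unknown roles fall back to an empty permission set, so the check denies by default.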

The Operating Model (How You Deploy)

- Private Deployment (recommended for regulated): Foundry runs entirely inside your VPC/on-prem, with all artifacts (weights, logs, datasets) staying in your environment.

- Managed Installation: We provision, harden, and maintain Foundry inside your environment under an operating agreement (you still own the artifacts).

- SaaS (optional, non-regulated): Multi-tenant control plane with isolated data planes; typically used for prototypes before moving to private.

The End-to-End Flow (What Happens Under the Hood)

Ingest & Curate

- You connect storage and drop in domain corpora (docs, CSV, PDFs, Excel, PNG, JPEG, forms, logs).

- PII/secret scanning, redaction, chunking, labeling, and a train/val/test split with lineage.
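One way to make the train/val/test split reproducible and traceable is to assign each record by hashing its ID with a fixed seed, then store the assignment as a lineage manifest. This is a sketch under that assumption; the 80/10/10 ratios, the seed string, and the manifest shape are illustrative.

```python
import hashlib


def assign_split(record_id: str, seed: str = "v1", val: float = 0.1, test: float = 0.1) -> str:
    """Deterministically map a record to train/val/test by hashing its ID."""
    digest = hashlib.sha256(f"{seed}:{record_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF  # roughly uniform value in [0, 1]
    if bucket < test:
        return "test"
    if bucket < test + val:
        return "val"
    return "train"


def build_manifest(record_ids: list[str], seed: str = "v1") -> dict:
    """Lineage manifest: the split each record landed in, plus the seed used."""
    splits = {rid: assign_split(rid, seed) for rid in record_ids}
    counts = {name: sum(1 for s in splits.values() if s == name)
              for name in ("train", "val", "test")}
    return {"seed": seed, "splits": splits, "counts": counts}
```

Because assignment depends only on the record ID and seed, adding new records later never moves existing ones between splits, which keeps earlier evaluation results comparable.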

Tune

- Choose a base model (e.g., a text model in the 4B to 30B parameter range, or a vision-language model).

- Run adapter-based tuning on curated datasets; Foundry handles scheduling, retries, checkpoints, and artifact versioning.

Evaluate

- Automated task suites + safety checks + SME reviews.

- Compare model variants; promote only those meeting thresholds.
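Promotion gating can be expressed as a threshold check per metric, followed by a choice among the variants that pass. The metric names and threshold values below are hypothetical placeholders for an enterprise's own task suite; note that some metrics, like hallucination rate, must stay below their threshold.

```python
from typing import Optional

# Hypothetical metrics and thresholds.
THRESHOLDS = {"claims_accuracy": 0.90, "compliance_recall": 0.95, "hallucination_rate": 0.02}
LOWER_IS_BETTER = {"hallucination_rate"}


def gate(metrics: dict[str, float]) -> tuple[bool, list[str]]:
    """Return (promote?, failures). A missing metric counts as a failure."""
    failures = []
    for name, threshold in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif name in LOWER_IS_BETTER and value > threshold:
            failures.append(f"{name}: {value} > {threshold}")
        elif name not in LOWER_IS_BETTER and value < threshold:
            failures.append(f"{name}: {value} < {threshold}")
    return (not failures, failures)


def pick_variant(candidates: dict[str, dict[str, float]]) -> Optional[str]:
    """Among variants that pass every gate, pick the one with the best claims_accuracy."""
    passing = [name for name, m in candidates.items() if gate(m)[0]]
    return max(passing, key=lambda n: candidates[n]["claims_accuracy"], default=None)
```

A variant with the highest headline accuracy can still be rejected if it misses any other threshold, which is the point of gating on the whole suite.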

Guard & Align

- Configure content/safety policies, regulated-term filters, and groundedness rules (e.g., “cite source if confidence < X”).

- Collect SME preferences and run DPO cycles to sharpen judgment.
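The example rule “cite source if confidence < X” might be enforced as a post-generation check like the one below. It assumes citations appear as bracketed numbers such as `[2]` that index the retrieved sources, and that a calibrated confidence score is available; both are assumptions, as is the 0.8 default for X.

```python
import re
from dataclasses import dataclass

CITATION = re.compile(r"\[(\d+)\]")  # assumed citation marker format, e.g. "[2]"


@dataclass
class Answer:
    text: str
    confidence: float  # assumed to be a calibrated score in [0, 1]


def enforce_grounding(answer: Answer, num_sources: int, min_confidence: float = 0.8) -> str:
    """Return 'pass', 'needs_citation', or 'invalid_citation'."""
    cited = {int(n) for n in CITATION.findall(answer.text)}
    # A citation must point at one of the retrieved sources (1-based)
    if any(n < 1 or n > num_sources for n in cited):
        return "invalid_citation"
    # Low-confidence answers must carry at least one citation
    if answer.confidence < min_confidence and not cited:
        return "needs_citation"
    return "pass"
```

Checking that each citation points at a retrieved source catches fabricated references, which a simple "has a citation" check would miss.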

Deploy

- Roll out private endpoints behind your IAM proxies; set quotas and SLOs; enable logging and anonymization policies.
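Per-client quotas are often implemented as a token bucket. This sketch allows bursts up to `capacity` requests and refills at `rate` requests per second; the class is hypothetical, and the injectable clock exists only to make it testable.

```python
import time
from typing import Callable


class TokenBucket:
    """Per-client quota: bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Consume `cost` tokens if available; return whether the request may proceed."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

The `cost` parameter lets a deployment charge larger requests (for example, long prompts) more than one token each.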

Observe & Improve

- Monitor hallucination/drift, user feedback, and costs; schedule incremental fine-tunes; keep the model fresh with continuous learning.
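Drift monitoring needs a number to alert on. One common choice is the Population Stability Index (PSI), which compares the binned distribution of a signal, such as input length or model confidence, in a baseline window against a recent window. PSI is an assumption here, not a documented Foundry metric.

```python
import bisect
import math


def bin_proportions(values: list[float], edges: list[float]) -> list[float]:
    """Share of values in each bin defined by sorted edges (len(edges) + 1 bins)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (proportions per bin)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total
```

A common rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift worth routing to a retraining queue; the right thresholds depend on the signal being monitored.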

No vendor names, full functionality: Foundry includes a production-grade orchestration layer and an experiment/evaluation tracker—without tying you to third-party tool brands.

Security & Compliance (Built-In)

- Data never leaves your trust boundary; an air-gapped option is available for on-prem deployments.

- Least-privilege IAM, network isolation, VPC peering/private links.

- Encryption in transit/at rest; KMS integration.

- Full auditability: immutable logs for prompts, model versions, guardrail decisions, and outputs.

- Explainability hooks: evidence traces, citations, and decision rationales to support audits.
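“Immutable” audit logs are often made tamper-evident with a hash chain: each entry's hash covers the previous entry's hash, so altering an earlier record invalidates everything after it. This is a minimal sketch of that idea, not Foundry's log format; the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def append_entry(log: list[dict], event: dict) -> None:
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    log.append({"prev": prev_hash, "event": event,
                "hash": hashlib.sha256(body.encode("utf-8")).hexdigest()})


def verify(log: list[dict]) -> bool:
    """Recompute every hash; editing any entry, or removing one mid-chain, breaks it."""
    prev_hash = GENESIS
    for entry in log:
        body = json.dumps({"prev": prev_hash, "event": entry["event"]}, sort_keys=True)
        expected = hashlib.sha256(body.encode("utf-8")).hexdigest()
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True
```

A chain alone cannot detect truncation of the newest entries, so the latest hash is typically also anchored somewhere the writer cannot modify, such as write-once storage.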

What You Own (Your IP)

- Model artifacts: base model selections, tuned adapters, merged weights.

- Datasets & eval suites: curated corpora, splits, golden-sets, test cases.

- Policies & prompts: guardrail rules, templates, system prompts, chain graphs.

- Operational knowledge: playbooks, benchmarks, drift thresholds.

This portfolio forms a compounding moat—each cycle makes your model smarter for you, not for a public provider.

Business Impact — Example KPIs You Can Track

- Underwriting/claims: time-to-decision ↓, leakage/fraud detection ↑, straight-through processing ↑.

- Compliance: audit prep time ↓, flagged-risk recall ↑, “explainability coverage” ↑.

- Contact centers: AHT ↓, FCR ↑, deflection ↑, CSAT ↑.

- Productivity: analyst hours saved, report generation time ↓.

- Cost control: API expenses avoided, infra spend per 1k requests ↓, $/correct answer ↓.

- Model quality: hallucination rate ↓, groundedness ↑, domain accuracy ↑.
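The “$/correct answer” KPI ties cost to quality rather than to volume. The worked sketch below uses hypothetical numbers: a cheaper model answering 50% of 100,000 requests correctly for $1,000, versus a tuned model answering 80% correctly for $1,200.

```python
def cost_per_1k_requests(spend: float, requests: int) -> float:
    """Infrastructure spend normalized to 1,000 requests."""
    return 1000 * spend / requests


def cost_per_correct_answer(spend: float, requests: int, accuracy: float) -> float:
    """Total spend divided by the number of correct answers."""
    correct = requests * accuracy
    if correct <= 0:
        raise ValueError("no correct answers in this period")
    return spend / correct


# Hypothetical month: two options serving the same 100,000 requests
cheap = cost_per_correct_answer(spend=1_000.0, requests=100_000, accuracy=0.50)
tuned = cost_per_correct_answer(spend=1_200.0, requests=100_000, accuracy=0.80)
```

The tuned model costs more per 1k requests ($12 vs. $10) but less per correct answer ($0.015 vs. $0.02), which is why the two KPIs are worth tracking together.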

Example Deployments (What Teams Actually Ship)

- InsurancGPT: Underwriting assist, coverage checks, loss-cause reasoning, claims triage; policy summarization with citations; fraud signals; audit-ready transcripts.

- ForensicsGPT: Evidence extraction, chain-of-custody narratives, entity linking across documents, fact-pattern reconstruction with explanation trails.

- NammaKannadaGPT: A regional-language LLM for citizen services and enterprise workflows; tokenizer + domain corpora; culturally and linguistically aligned outputs.

Competitive Positioning (When You’re Asked, “Why Not X?”)

- Versus public APIs: No data leaves your environment; no unannounced behavior changes from provider-side model updates; no per-token billing surprises; you own the intelligence.

- Versus hyperscaler “studios”: Foundry is cloud-portable and vendor-neutral—no platform lock-in; artifacts remain yours.

- Versus data platforms: Foundry is model-lifecycle–first (tuning, guardrails, RLHF, serving) with deep domain packs; it integrates with your data lakes/warehouses rather than replacing them.

Rollout Plan (Typical 4–8 Weeks)

Week 0–1: Foundation

- VPC/on-prem installation, IAM/SSO, storage connections, security baselines.

Week 2–3: Data & Baselines

- Curate datasets; run first baseline; define eval & safety thresholds.

Week 4–5: Fine-Tune & Guard

- Adapter tuning; configure guardrails; SME review; DPO cycle #1.

Week 6: Pilot Deploy

- Private endpoints, integration with sandbox app, SLOs, dashboards.

Week 7–8: Production

- Canary rollout; KPIs live; cost caps; retraining cadence agreed.
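The Week 7–8 canary rollout can be implemented with sticky, hash-based traffic splitting, so each user consistently sees either the stable or the canary model. The function, salt, and percentages are illustrative; changing the salt reshuffles which users land in the canary.

```python
import hashlib


def route(user_id: str, canary_percent: float, salt: str = "release-2") -> str:
    """Sticky canary routing: the same user always hits the same model version."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 10_000  # 10,000 buckets = 0.01% granularity
    return "canary" if bucket < canary_percent * 100 else "stable"
```

Widening the rollout from 5% to 20% only moves users from stable to canary, never back, because a bucket below 500 is also below 2,000.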

Pricing & Commercial (How You Pay—Not How You Lose IP)

- Annual platform license (includes updates & security patches).

- Managed install & support tiers (SLA options).

- Professional services (data curation, eval design, migration).

- Domain model packs (Insurance, Healthcare, Finance) to accelerate time-to-value.

(Your company owns the models, datasets, policies, and evaluation suites. The platform is licensed—like leasing a secured factory where you own everything you produce.)

FAQ (Investor & Buyer Hot Buttons)

- Who owns the GPUs? You do (cloud or on-prem). Foundry schedules and optimizes, but does not require our hardware.

- What models do you support? A broad set of open-weight text and multimodal families; we keep adding adapters/recipes.

- Can we keep using public APIs? Yes—hybrid setups are supported, with routing and policy controls.

- How do you handle drift/hallucination? Continuous evals, groundedness checks, SME reviews, and scheduled DPO refreshes.

- What if a regulator asks “why”? We log prompts, retrieval context, policy decisions, and model versions; you can generate evidence packs.
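The hybrid setup in the FAQ implies a routing policy in front of the models. Below is a minimal sketch that keeps anything with detected PII or a regulated tag on the private model and lets the rest go to a public API; the tag names, patterns, and backend labels are all hypothetical.

```python
import re

# Hypothetical policy inputs for illustration only.
PII = re.compile(r"\b\d{3}-\d{2}-\d{4}\b|[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
REGULATED_TAGS = {"phi", "claims", "underwriting"}


def choose_backend(prompt: str, tags: set[str], public_allowed: bool = True) -> str:
    """Return 'private' or 'public' for a request, failing closed to private."""
    if not public_allowed or tags & REGULATED_TAGS or PII.search(prompt):
        return "private"
    return "public"
```

Every condition pushes traffic toward the private model, so a misconfigured tag or a missed pattern errs on the side of keeping data inside the trust boundary; the routing decision itself can be written to the same audit log.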

The Bottom Line

Owning your model is owning your intelligence. With GenAI Foundry, you get a repeatable, governed manufacturing line for enterprise LLMs—so every sprint compounds your advantage.

Your data. Your model. Your IP.

That’s the difference.

Next in Series →

In the next article, we’ll take a closer look at InsurancGPT in Action — our domain-specific model purpose-built for the insurance industry.

We’ll showcase how InsurancGPT transforms underwriting, claims automation, fraud detection, and compliance workflows, while maintaining strict security and regulatory alignment.

Stay tuned — the future of regulated enterprise AI isn’t just private, it’s vertical, intelligent, and business-ready.