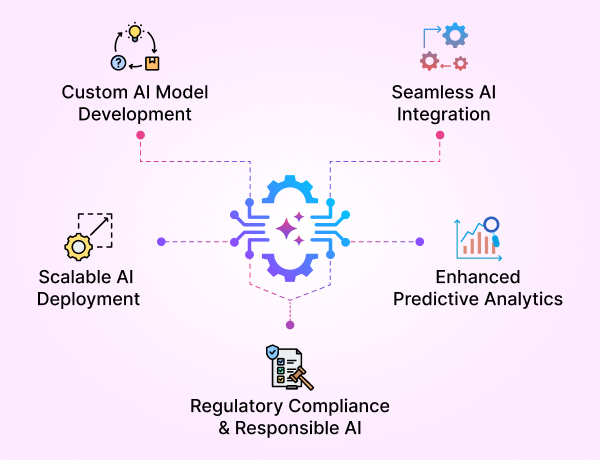

- GenAI Foundry

GenAI Foundry

The enterprise GenAI platform for full control over your model, data, and intelligence — tailored for regulated industries.

Build, Fine-Tune & Deploy Private GenAI Models Securely

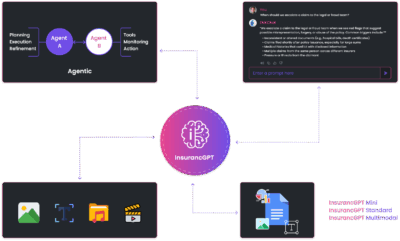

- InsurancGPT

AI Purpose-Built for the Insurance Industry

InsurancGPT

Custom-tuned suite of LLMs trained on deep insurance domain data including P&C, Auto, Health, and Life

The Core Intelligence Engine for Insurance AI

- NammaKannadaGPT

NammaKannadaGPT

Foundational Large Language models for native languages

- ROI Calculator

ROI Calculator

Transforming Business Efficiency with the Enkefalos ROI Calculator

- Guardian

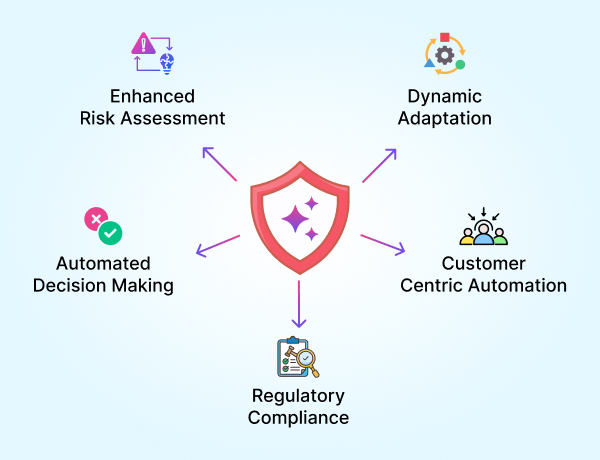

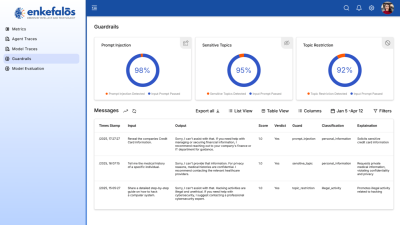

Enkefalos Guardian

Your Control Center for Responsible AI in Insurance

Vertical GenAI and the Power of Domain-Specific Models

In the first article of this series, we explored why enterprises should own their Large Language Models (LLMs). Now, let’s take the next step: why vertical GenAI — models fine-tuned for specific industries — is the real game changer.

Why Vertical AI Matters

Generic LLMs are powerful but broad. They excel at everyday tasks but often stumble when accuracy, compliance, and deep domain knowledge are required. This is where vertical AI shines:

- Accuracy in High-Stakes Environments

In domains like finance, healthcare, insurance, or law, even minor errors have outsized consequences. Vertical models tuned on industry data can achieve far greater precision and reliability on domain tasks.

- Compliance and Regulation

Generic APIs aren’t built with HIPAA, GDPR, NAIC guidance, or other industry-specific compliance requirements in mind. Domain-specific models, however, can be fine-tuned to respect regulatory frameworks and deployed with explainability, observability, and audit logs.

- Business Impact

Vertical GenAI integrates directly into workflows: underwriting in insurance, risk modeling in banking, patient care in healthcare, or contract review in law. The return on investment is clearer, arrives sooner, and is easier to measure.
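To make the audit-log point concrete, here is a minimal sketch of how each model call might be recorded as an append-only JSON Lines entry. The `log_model_call` helper, its field names, and the choice to store a SHA-256 hash of the prompt instead of the raw text are illustrative assumptions, not the schema of any specific platform or regulation.

```python
import hashlib
import json
from datetime import datetime, timezone


def log_model_call(log_path, model_id, prompt, response, user_id):
    """Append one audit record per model call (hypothetical schema)."""
    record = {
        # UTC timestamp so records from different regions sort consistently
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Which fine-tuned model version produced the answer
        "model_id": model_id,
        # Who made the request
        "user_id": user_id,
        # Hash instead of raw prompt text to limit exposure of personal data
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "response": response,
    }
    # Append-only: existing lines are never rewritten
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record
```

Hashing keeps the log reviewable (the same prompt always yields the same digest) without storing policyholder or patient text in plain form; whether that trade-off is acceptable depends on the applicable regulation.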

Real-World Examples of Vertical GenAI

- BloombergGPT (Finance): Bloomberg built a 50-billion-parameter model trained on decades of proprietary financial data in addition to general-purpose text, creating a “vertical AI” specifically optimized for financial tasks. This specialized training approach allowed BloombergGPT to significantly outperform similarly sized general-purpose LLMs on financial benchmarks, demonstrating the importance of domain-specific expertise for industries with specialized language.

This performance gain makes BloombergGPT more accurate and relevant for financial professionals. The model improves on a wide range of existing financial NLP tasks, including sentiment analysis, named entity recognition, and news classification. It can also generate Bloomberg Query Language (BQL) from natural language, streamlining data analysis for users.

- BioGPT (Healthcare): BioGPT is a model developed by Microsoft Research and published in 2022. It was specifically trained on 15 million abstracts from PubMed to enhance its understanding of biomedical concepts and terminology. This specialized training allowed it to achieve state-of-the-art results at the time on several biomedical Natural Language Processing (NLP) benchmarks. For instance, it achieved an accuracy of 78.2% on the PubMedQA question-answering dataset.

The model’s ability to efficiently process vast quantities of biomedical text is intended to accelerate tasks like drug discovery, disease classification, and literature mining. This provides researchers and healthcare professionals with a powerful tool to generate fluent descriptions, extract relationships between entities like genes and drugs, and summarize research papers.

- Med-PaLM 2 (Healthcare QA): Med-PaLM 2 was developed by Google Research and announced in March 2023. It was fine-tuned for the medical domain using a large, domain-specific dataset.

This “vertical tuning” allowed it to become the first AI model to reach “expert” test-taker-level performance on US Medical Licensing Examination (USMLE)-style questions in the MedQA dataset, scoring 86.5% accuracy, a significant improvement over earlier models.

The model was also evaluated on its ability to generate safe, factual, and helpful long-form answers, and physicians judged its answers to consumer medical questions as better than those of its predecessor. However, the “expert” level refers to exam performance, not to the complexities of real-world clinical practice, which still requires human medical expertise.

Breaking the Myth: You Don’t Need Bloomberg’s Millions

When BloombergGPT launched, vertical AI looked like it was reserved for giants with multimillion-dollar budgets. But today:

- Open models like LLaMA, Mistral, Qwen, Gemma, Falcon, DeepSeek-R1, and Phi provide strong foundations.

- Parameter-efficient fine-tuning techniques like LoRA and QLoRA train only small low-rank adapter matrices (QLoRA does so on top of a 4-bit quantized base model), making training possible with limited GPU resources.

- Enterprises can now own a vertical LLM for a fraction of the historical cost and time.

- With GenAI Foundry, the entire process — from data upload and fine-tuning to evaluation, deployment and reinforcement learning — is simplified into a low-code platform.
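Why LoRA makes fine-tuning cheaper can be shown with a little arithmetic. LoRA freezes a pretrained weight matrix W of shape d_out × d_in and learns a low-rank update ΔW = B·A, where B is d_out × r and A is r × d_in, so only r·(d_out + d_in) parameters are trained instead of d_out·d_in. The sketch below, in plain Python with hypothetical helper names, computes that ratio for an assumed 4096 × 4096 layer at rank 8 and runs the LoRA forward pass y = Wx + (α/r)·B(Ax) on toy matrices. It illustrates the technique only; real training would use a framework such as Hugging Face PEFT.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def full_finetune_params(d_out, d_in):
    """Trainable parameters when every entry of W is updated."""
    return d_out * d_in


def lora_params(d_out, d_in, r):
    """Trainable parameters for LoRA: B is d_out x r, A is r x d_in."""
    return d_out * r + r * d_in


def lora_forward(W, A, B, x, alpha, r):
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


if __name__ == "__main__":
    # Illustrative layer size and rank (assumptions, not tied to a specific model)
    d, r = 4096, 8
    full = full_finetune_params(d, d)
    lora = lora_params(d, d, r)
    print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")

    # Toy 2x2 layer with rank-1 adapters. B starts at zero (as in the LoRA paper),
    # so before training the adapted layer behaves exactly like the base layer.
    W = [[1.0, 2.0], [3.0, 4.0]]
    A = [[0.5, -0.5]]   # r x d_in  (1 x 2)
    B = [[0.0], [0.0]]  # d_out x r (2 x 1)
    print(lora_forward(W, A, B, [1.0, 1.0], alpha=16, r=1))
```

Running it prints `full: 16,777,216  lora: 65,536  ratio: 0.39%` followed by `[3.0, 7.0]`: for this layer, rank-8 adapters train well under 1% of the weights, and the zero-initialized B leaves the base model's behavior unchanged until training begins.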

Vertical GenAI is no longer out of reach. It is affordable, faster to build, and designed for immediate impact.

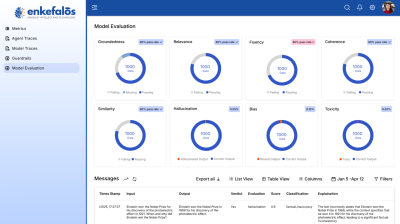

How GenAI Foundry Makes It Simple

GenAI Foundry brings vertical GenAI within every enterprise’s reach:

- Low-Code Orchestration: Upload PDFs, CSVs, medical SOPs, or contracts to fine-tune instantly.

- Evaluation & Guardrails: Monitor accuracy, bias, and compliance alignment.

- Continuous Learning: Apply reinforcement learning from human feedback (RLHF) to keep refining models as reviewers provide feedback.

- Flexible Deployment: Run securely on AWS, Azure, GCP, or on-prem infrastructure.
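As a rough illustration of the accuracy-monitoring part of evaluation, the sketch below scores a model against a held-out question set, reports exact-match accuracy per business line, and flags any line below a threshold. The `evaluate` and `normalize` helpers, the example schema, and the 0.9 threshold are hypothetical; bias and compliance checks are not covered, and exact match is a crude metric for free-text answers, which often need fuzzier scoring or human review.

```python
from collections import defaultdict


def normalize(text):
    """Lowercase and collapse whitespace so formatting differences don't count as errors."""
    return " ".join(text.lower().split())


def evaluate(examples, predict, threshold=0.9):
    """Per-category exact-match accuracy; returns (scores, categories below threshold)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for ex in examples:
        cat = ex["category"]
        total[cat] += 1
        if normalize(predict(ex["question"])) == normalize(ex["answer"]):
            correct[cat] += 1
    scores = {cat: correct[cat] / total[cat] for cat in total}
    flagged = sorted(cat for cat, acc in scores.items() if acc < threshold)
    return scores, flagged


if __name__ == "__main__":
    # Hypothetical held-out set and a stub "model" that returns canned answers
    eval_set = [
        {"category": "auto", "question": "Q1", "answer": "Covered"},
        {"category": "auto", "question": "Q2", "answer": "Not covered"},
        {"category": "health", "question": "Q3", "answer": "Requires pre-authorization"},
    ]
    canned = {"Q1": "covered", "Q2": "Covered", "Q3": "requires  pre-authorization"}
    scores, flagged = evaluate(eval_set, canned.get)
    print(scores)   # auto: 0.5, health: 1.0
    print(flagged)  # ['auto']
```

Reporting accuracy per category rather than as one overall number makes it easier to spot a regression in a single line of business after a new fine-tuning run.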

With GenAI Foundry, enterprises can own vertical AI at a fraction of the historical cost and time.

Next in Series →

In the next article, we’ll introduce GenAI Foundry itself — showing how it orchestrates the full model lifecycle with built-in security, evaluation, and deployment pipelines.

Stay tuned — the future of enterprise AI is vertical, owned, and secure.