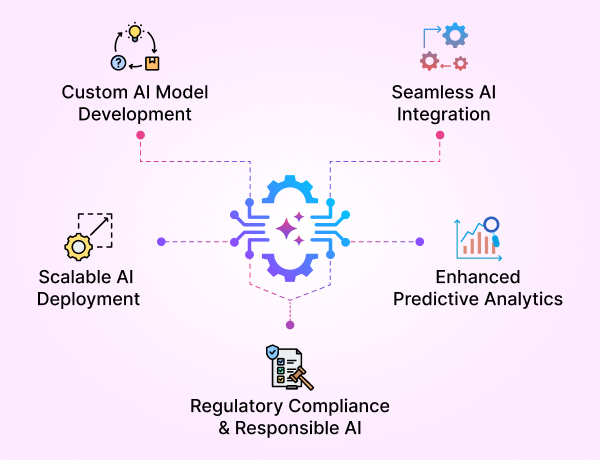

- GenAI Foundry

GenAI Foundry

The enterprise GenAI platform for full control over your model, data, and intelligence — tailored for regulated industries.

Build, Fine-Tune & Deploy Private GenAI Models Securely

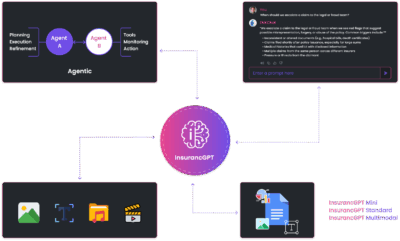

- InsurancGPT

AI Purpose-Built for the Insurance Industry

InsurancGPT

Custom-tuned suite of LLMs trained on deep insurance domain data including P&C, Auto, Health, and Life

The Core Intelligence Engine for Insurance AI

- NammaKannadaGPT

NammaKannadaGPT

Foundational Large Language models for native languages

- ROI Calculator

ROI Calculator

Transforming Business Efficiency with the Enkefalos ROI Calculator

- Guardian

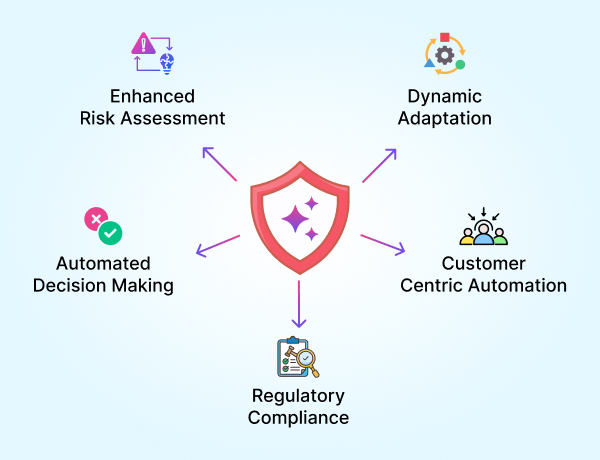

Enkefalos Guardian

Your Control Center for Responsible AI in Insurance

Why Enterprises Should Own Their Large Language Models (LLMs)

In today’s AI-driven world, enterprises are quickly recognizing the transformative power of Large Language Models (LLMs). These models — capable of understanding, generating, and reasoning over human language — are not just tools for automation; they are emerging as key assets for competitive advantage. However, the default approach of accessing LLMs via cloud APIs from providers like OpenAI, Google, and Anthropic comes with increasing risks and costs. For regulated enterprises especially, the time has come to rethink this dependency.

1. Your Data Trains the Model — But You Don’t Own the Model

Many enterprises interact with LLMs via API, whether directly through OpenAI's own platform or through enterprise cloud offerings such as Azure OpenAI or AWS Bedrock.

While some providers now claim they don’t train on your data, and even offer private deployments, you still don’t own the model or the intelligence derived from it. You’re simply renting access to someone else’s brain.

Whether you're an insurer pricing risk, a bank fine-tuning financial advice, or a hospital triaging symptoms, the strategic value is still being built outside your walls.

By owning your own LLM, trained on your domain data and living in your infrastructure, you reclaim that value. You build institutional knowledge into your model and generate IP that belongs to your organization, not a cloud vendor.

2. Cost Control: Stop Paying Per Prompt Forever

Many enterprises now pay $8,000 to $15,000 per month in GenAI API costs. These fees often scale unpredictably as adoption grows, driven by token limits, rate caps, and higher pricing tiers. Even mid-sized organizations in regulated industries often report $10,000–$15,000/month on GenAI usage alone.

Owning your LLM changes the cost equation:

- Infrastructure + fine-tuning cost: starting from $4k–$6k/month for mid-sized enterprises (single model), scaling up to $10k–$12k/month for larger setups running multiple models

- Perpetual use: no metered cost per prompt

- Fine-tune once, then reuse the model across applications, retraining only when you choose

In regulated industries where compliance and scale matter, owning becomes cheaper and more predictable.
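To make the figures above concrete, here is a rough sketch that compares the monthly ranges quoted in this section and estimates the usage level at which a fixed private cost matches a metered API bill. Reusing the $8k–$15k API range for the larger setup and the $10-per-million-token blended API price are illustrative assumptions, not quoted vendor rates.

```python
from dataclasses import dataclass


@dataclass
class CostScenario:
    """Monthly cost ranges in USD for one deployment scenario."""
    name: str
    api_low: float
    api_high: float
    private_low: float
    private_high: float

    def monthly_savings_range(self) -> tuple[float, float]:
        # Worst case: lowest API bill compared with the most expensive private setup.
        worst = self.api_low - self.private_high
        # Best case: highest API bill compared with the cheapest private setup.
        best = self.api_high - self.private_low
        return worst, best


def break_even_tokens(private_monthly: float, usd_per_million_tokens: float) -> float:
    """Monthly token volume at which a metered API bill equals a fixed private cost."""
    return private_monthly / usd_per_million_tokens * 1_000_000


# Ranges from this section; reusing the $8k-$15k API range for the larger setup is an assumption.
mid_sized = CostScenario("mid-sized, single model", 8_000, 15_000, 4_000, 6_000)
large = CostScenario("larger, multiple models", 8_000, 15_000, 10_000, 12_000)

for scenario in (mid_sized, large):
    worst, best = scenario.monthly_savings_range()
    print(f"{scenario.name}: monthly savings between {worst:,.0f} and {best:,.0f} USD")

# Hypothetical blended API price of $10 per million tokens (not a quoted vendor rate).
print(f"break-even at $5k/month: {break_even_tokens(5_000, 10):,.0f} tokens")
```

The worst case for the multi-model setup comes out negative, which is why the break-even volume matters: a fixed-cost private model pays off fastest when usage is high and steady.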

Public vs. Private LLM — A Comparison Table

| Feature | Public LLM (API-based) | Private LLM (Self-hosted) |
|---|---|---|
| Data Privacy | Data sent to external servers | Data remains inside your environment |
| Cost | Pay-per-token or API call | Fixed infra cost, unmetered usage |
| Customization | Limited or none | Fully customizable and fine-tunable |
| Model Ownership | Provider owns and updates | You own and control the model |
| Compliance | Risk of violations (GDPR, HIPAA) | Full control enables easier compliance |
| Performance Transparency | Black box | Observable, explainable behavior |
| Vendor Lock-in Risk | High | Low |
| IP Creation | Improves provider model | Generates proprietary enterprise IP |
| Latency | Medium/High (due to internet round trips) | Low (local/private cloud inference) |
| Auditability | Limited | Full logs, traceability |

3. Security and Privacy: Your Data Never Leaves

With closed APIs, prompts and responses are routed over the internet, processed on external servers, and may be logged or retained by the provider. This poses serious risks:

- Data breaches

- Inability to meet GDPR, HIPAA, or regional data laws

- Audit difficulty and lack of traceability

A self-hosted, private LLM ensures:

- Full control over all logs, prompts, and responses

- Encryption and isolation inside your own VPC or on-prem infra

- No exposure of prompts or responses to third-party servers

In sectors like banking, healthcare, and insurance, this is non-negotiable.
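One way to get the traceability described in this section is an append-only, hash-chained audit log kept inside your own environment. The sketch below is a minimal in-memory illustration; the `AuditLog` class and its field names are hypothetical, not part of any product API, and a production version would persist records to encrypted storage, which is out of scope here.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Hypothetical append-only, hash-chained log of prompts and responses.

    Each record stores the SHA-256 hash of the previous record, so editing an
    earlier entry breaks verification from that point on.
    """

    def __init__(self) -> None:
        self.records: list[dict] = []

    def append(self, user: str, prompt: str, response: str) -> dict:
        prev_hash = self.records[-1]["hash"] if self.records else "0" * 64
        record = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "prompt": prompt,
            "response": response,
            "prev_hash": prev_hash,
        }
        record["hash"] = self._digest(record)
        self.records.append(record)
        return record

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash every field except the hash itself, with stable key ordering.
        body = {k: v for k, v in record.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        prev_hash = "0" * 64
        for record in self.records:
            if record["prev_hash"] != prev_hash or record["hash"] != self._digest(record):
                return False
            prev_hash = record["hash"]
        return True


log = AuditLog()
log.append("underwriter-17", "Summarize claim 123", "Water damage, policy active.")
log.append("underwriter-17", "Any exclusions?", "Flood exclusion applies.")
print(log.verify())  # True
log.records[0]["response"] = "edited"  # simulate tampering
print(log.verify())  # False
```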

4. No Vendor Lock-in. Total Platform Agility.

Today, if public LLM providers change their pricing, model behavior, or terms of service, your application suffers instantly. We’ve seen:

- API pricing and rate-limit changes without notice

- Downtime during critical hours

- Model behavior shifts due to quiet retraining

If your application relies on these APIs, you’re at the mercy of a black-box vendor.

Owning your LLM eliminates this fragility:

- You choose which model version to run

- You decide when to update or roll back

- You can deploy on any cloud or on-prem
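The control points listed above (pinning a version, promoting a new one, rolling back) can be sketched as a small in-house model registry. `ModelRegistry`, its methods, and the storage URIs below are hypothetical illustrations; in practice this role is often filled by an existing model-registry tool.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRegistry:
    """Hypothetical registry tracking which model version serves traffic."""
    versions: dict[str, str] = field(default_factory=dict)  # version -> artifact location
    history: list[str] = field(default_factory=list)        # promotion history, newest last

    def register(self, version: str, artifact_uri: str) -> None:
        self.versions[version] = artifact_uri

    def promote(self, version: str) -> None:
        # Only versions you have registered (and evaluated) can go live.
        if version not in self.versions:
            raise KeyError(f"unknown model version: {version}")
        self.history.append(version)

    def rollback(self) -> str:
        # Return to the previously promoted version; nothing changes unless you call this.
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.history[-1]

    @property
    def active(self) -> str:
        return self.history[-1]


registry = ModelRegistry()
registry.register("claims-v1", "s3://models/claims-v1")  # hypothetical URIs
registry.register("claims-v2", "s3://models/claims-v2")
registry.promote("claims-v1")
registry.promote("claims-v2")
print(registry.active)      # claims-v2
print(registry.rollback())  # claims-v1
```

The point of the design is that model changes happen only through explicit `promote` and `rollback` calls you control, rather than through a provider's silent update.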

It’s the same philosophy that drove enterprises from SaaS lock-in to owning cloud databases and microservices. The AI stack needs to evolve the same way.

5. Performance and Customization

Third-party models are generalists. They may be good at trivia or general summarization, but they are not experts in your domain. Whether you’re an insurer handling loss claims, a pharma firm analyzing molecules, or a law firm parsing regulation — you need your model to speak your language.

Fine-tuning your own model:

- Can increase accuracy on domain-specific tasks, in some cases by 20% or more

- Can reduce hallucinations

- Creates predictable, trusted behavior

And with tools like GenAI Foundry, you don’t need a PhD team to do it.
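Before relying on a gain like 20%, it helps to measure it on your own held-out data and to be clear whether the gain is in percentage points or relative to the baseline. The sketch below compares a base and a fine-tuned model using exact-match accuracy. The model functions are hypothetical stubs standing in for real inference calls, and exact match is a simplifying assumption that suits short, closed-form answers.

```python
from typing import Callable, Sequence


def accuracy(model: Callable[[str], str], examples: Sequence[tuple[str, str]]) -> float:
    """Share of held-out examples where the model's answer exactly matches the label."""
    correct = sum(model(q).strip().lower() == a.strip().lower() for q, a in examples)
    return correct / len(examples)


def compare(base: Callable[[str], str], tuned: Callable[[str], str],
            examples: Sequence[tuple[str, str]]) -> dict[str, float]:
    base_acc = accuracy(base, examples)
    tuned_acc = accuracy(tuned, examples)
    return {
        "base": base_acc,
        "tuned": tuned_acc,
        # Report both: a percentage-point gain and a gain relative to the baseline.
        "absolute_gain": tuned_acc - base_acc,
        "relative_gain": (tuned_acc - base_acc) / base_acc if base_acc else float("inf"),
    }


held_out = [
    ("Does term life insurance build cash value?", "no"),
    ("Is hail damage covered under auto comprehensive coverage?", "yes"),
    ("Does a standard homeowners policy cover flood damage?", "no"),
    ("Is P&C short for property and casualty?", "yes"),
]
questions = [q for q, _ in held_out]
# Stub "models" that return canned answers, standing in for real LLM calls.
base_model = dict(zip(questions, ["no", "no", "yes", "yes"])).get
tuned_model = dict(zip(questions, ["no", "yes", "no", "no"])).get

print(compare(base_model, tuned_model, held_out))
# {'base': 0.5, 'tuned': 0.75, 'absolute_gain': 0.25, 'relative_gain': 0.5}
```

Here the same result reads as a 25-point gain or a 50% relative gain, so it is worth stating which one a reported figure means.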

6. The LLM is Your IP. And That Increases Enterprise Valuation.

When you fine-tune and own your LLM, you are creating:

- A proprietary asset

- A competitive moat

- Defensible AI IP

For many AI-first companies, valuation multiples can be 2-3x higher for those that own their models than for those that simply use APIs. Investors increasingly ask: do you own the model? Do you own the data?

If the answer is yes, it reflects not only tech leadership, but also financial leverage.

7. You’re Already Owning Infra, Why Not Models?

Cloud transformed how we hosted applications. You still owned the database. You controlled your VPC. You had the ability to migrate and choose vendors.

With GenAI APIs, you own nothing.

- You don’t know how the model works

- You can’t explain it to regulators

- You can’t debug or adapt it

Owning your model gives you back the operational flexibility, cost control, and transparency that made cloud valuable in the first place.

8. Real Risks of Platform Dependency

There are real-world examples of LLM APIs being:

- Rate-limited for enterprise users during peak hours

- Delisted or deprecated without migration paths

- Blocked by regional governments or compliance authorities

Your app is not future-proof unless you control the engine.

Ready to Take Back Control?

GenAI Foundry by Enkefalos enables enterprises to:

- Deploy their own private, open-source LLMs

- Fine-tune with a low-code interface

- Run securely on any infrastructure

- Evaluate and improve continuously with built-in RLHF, guardrails, and monitoring

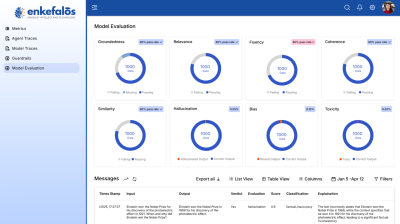

Built-in Orchestration and Observability

GenAI Foundry includes a full-lifecycle orchestration engine, covering everything from data upload, preprocessing, and model fine-tuning to reward optimization and retraining.

It includes monitoring hooks to track output quality, hallucination, bias, and model drift. This observability layer feeds into a continuous feedback loop, helping improve model behavior through post-deployment evaluations and reinforcement learning workflows.
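Monitoring hooks like these can take many forms. As a minimal sketch of one of them, the hypothetical `DriftMonitor` below flags drift when the rolling average of a per-response quality score falls more than a set tolerance below its deployment baseline. It is not GenAI Foundry's API; the scoring source, window size, and tolerance are assumptions you would tune.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    """Hypothetical drift check on a rolling window of quality scores.

    Scores could come from an automated evaluator, user feedback, or a
    guardrail check; how they are produced is outside this sketch.
    """

    def __init__(self, baseline: float, window: int = 100, tolerance: float = 0.05) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores: deque[float] = deque(maxlen=window)

    def record(self, score: float) -> bool:
        """Add a score; return True if the current window indicates drift."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data yet for a stable estimate
        return self.baseline - mean(self.scores) > self.tolerance


monitor = DriftMonitor(baseline=0.90, window=5, tolerance=0.05)
for s in [0.91, 0.89, 0.90, 0.92, 0.88]:
    drifted = monitor.record(s)
print(drifted)  # False
for s in [0.80, 0.78, 0.82, 0.79, 0.81]:
    drifted = monitor.record(s)
print(drifted)  # True
```

A drift flag like this would typically trigger the post-deployment evaluation and retraining steps described above rather than act on its own.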

Own your LLM. Own your intelligence.

Next in Series →

In the next article, we'll explore why verticalized LLMs, trained on domain-specific data, are unlocking powerful advantages for enterprises. We'll also show how owning your IP enhances valuation and keeps your data secure.

Stay tuned!